Abstract

Sentiment analysis is a domain of natural language processing (NLP) focusing on interpreting emotions, attitudes, and sentiments primarily from written text. However, recent research has extended this analysis to include audio and visual data, creating a burgeoning field known as Multimodal Sentiment Analysis (MSA). MSA is an evolving discipline dedicated to comprehending emotional expressions and sentiments in not only text but also acoustic and visual inputs. Many MSA models have been built in recent years with different frameworks \cite{lai2023multimodal}, from statistical non-machine learning techniques to complex neural networks. However, not many focus on generalization performance, which is the ability to predict unprecedented data. This project focuses on using a pre-trained Long Short-Term Memory (LSTM) based network together with Tensor Fusion Network (TFN) and Select-Additive Learning (SAL) algorithm to improve the model’s generalizability.

Data Architecture Diagram

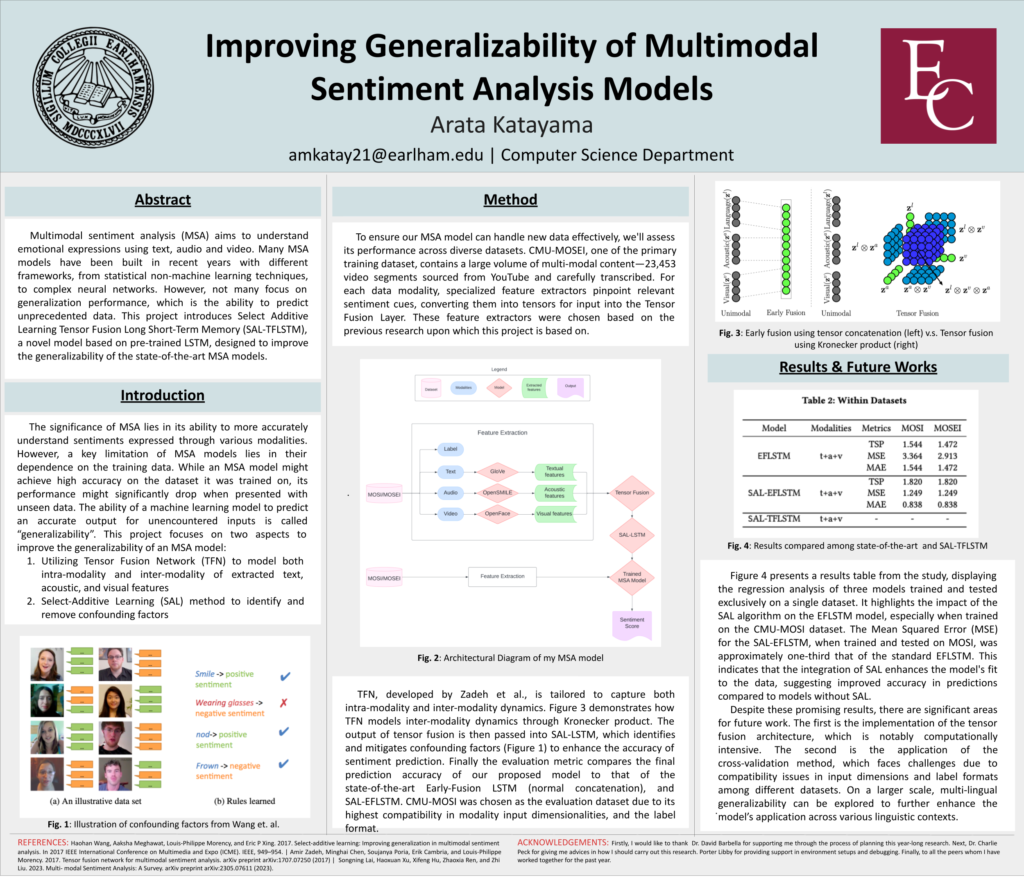

Poster