The new (or should I say, old) Kinect finally arrived today, and plugging it into one of the USB 2.0 ports gives me the following USB devices:

    Bus 001 Device 008: ID 045e:02bf Microsoft Corp.
    Bus 001 Device 038: ID 045e:02be Microsoft Corp.
    Bus 001 Device 005: ID 045e:02c2 Microsoft Corp.

... which is still not identical to what freenect is expecting, but more importantly, I was finally able to run one of the freenect example programs!

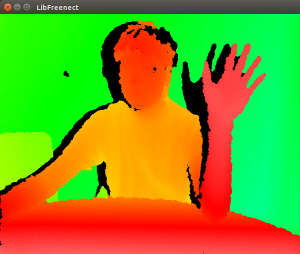

This is one half of the freenect-glview program window. It shows the depth image, which is needed to parse the body and subsequently the hand.

I then dove into the tools that the XKin library provides: helper programs that let the user define the gestures that another program will recognize. After some experimentation, along with re-reading the XKin paper and watching the demo videos, I found that XKin's gesture capabilities are more limited than I thought. You first have to close your hand to start a gesture, then move your hand along some path, and then open your hand to end the gesture. Only then will XKin try to guess which of the trained gestures was just performed.

It is a bit of an annoyance, since conductors don’t open and close their hands at all when conducting, but that is something the XKin library can improve upon, and at least I know what I can work with in the meantime.
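To make that close, move, open sequence concrete for myself, here is a rough sketch of it as a tiny state machine. This is not XKin's API or its actual implementation. `GestureSegmenter`, `segment`, and the `(hand_closed, position)` frame format are names I made up for illustration, and I am assuming some earlier step has already decided whether the hand is open or closed in each frame.

```python
from dataclasses import dataclass, field
from typing import Iterable, List, Optional, Tuple

Point = Tuple[float, float, float]  # assumed (x, y, z) hand position


@dataclass
class GestureSegmenter:
    """Hypothetical helper (not part of XKin): collects the hand path
    between a hand-close event and the following hand-open event."""
    recording: bool = False
    path: List[Point] = field(default_factory=list)

    def update(self, hand_closed: bool, position: Point) -> Optional[List[Point]]:
        """Feed one frame. Returns the finished path when the hand opens
        after a recording, otherwise None."""
        if hand_closed:
            if not self.recording:
                # Hand just closed: start a new gesture.
                self.recording = True
                self.path = []
            self.path.append(position)
            return None
        if self.recording:
            # Hand just opened: the gesture is complete.
            self.recording = False
            finished, self.path = self.path, []
            return finished
        # Hand is open and nothing is being recorded: ignore the frame.
        return None


def segment(frames: Iterable[Tuple[bool, Point]]) -> List[List[Point]]:
    """Split a stream of (hand_closed, position) frames into gesture paths."""
    segmenter = GestureSegmenter()
    gestures = []
    for hand_closed, position in frames:
        finished = segmenter.update(hand_closed, position)
        if finished is not None:
            gestures.append(finished)
    return gestures
```

Each path that comes out of `segment` is what would then be handed to a classifier to guess the gesture. The sketch also shows why this is awkward for conducting: a hand that never closes never starts a recording, so nothing is ever passed along for recognition.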
