CS488 – Elevator Pitch

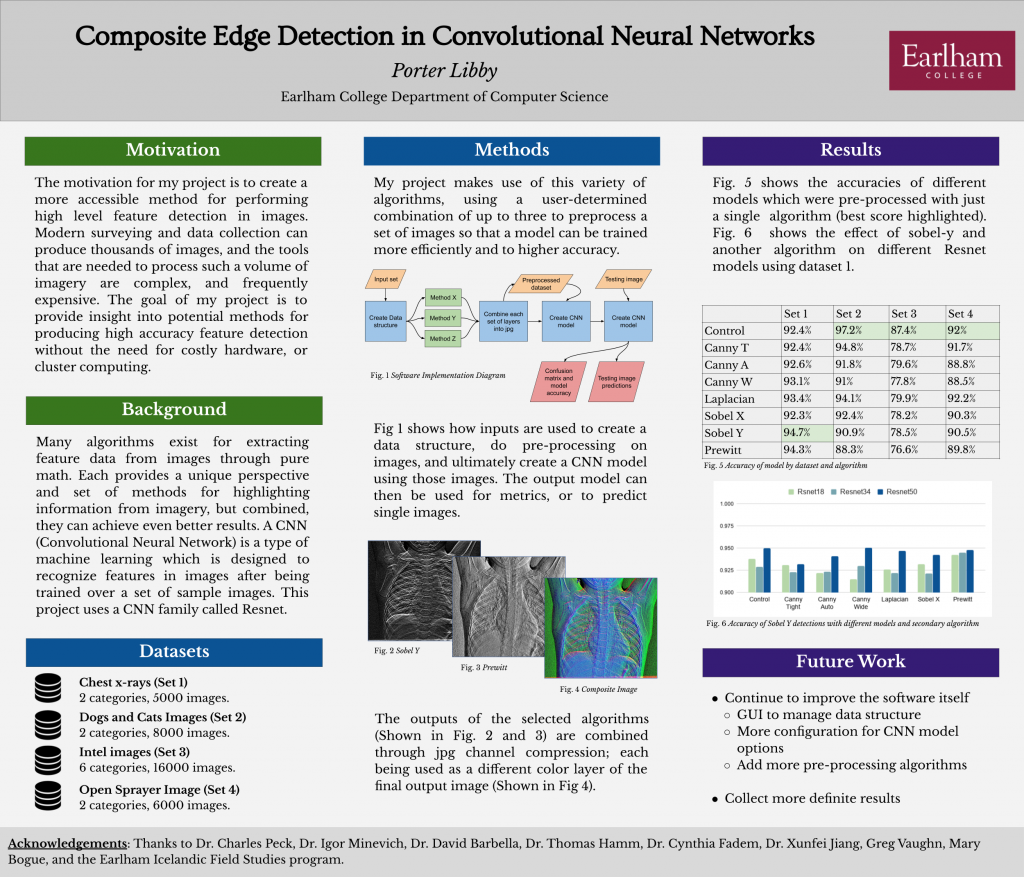

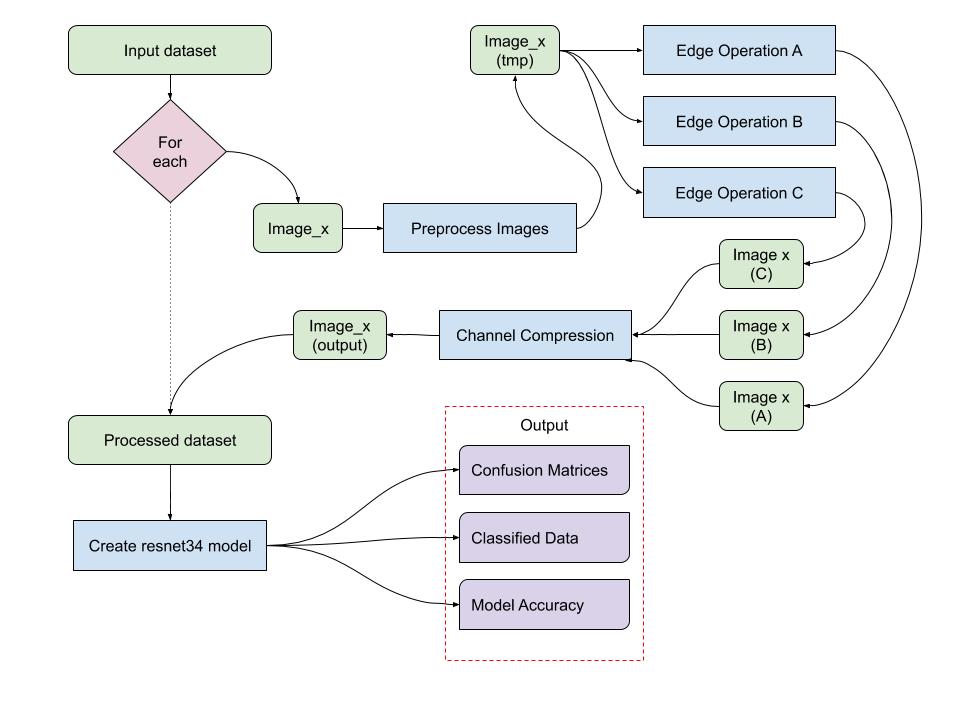

My project is about extracting features from images. Using low-cost collection techniques such as satellite imagery or drone surveys, a database of positive and negative cases can be created. Additional information will be extracted from each image in the database using a combination of modern algorithms, such as edge detection, and combined back into a single image as different colored layers of a JPEG. These processed images, which are meant to carry as much information as possible, are then used to train a machine learning model. My hypothesis is that the additional information provided by the edge detection algorithms will improve the accuracy and reliability of the model, reducing the need for expensive surveying equipment.
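
As a rough illustration of the layering idea, here is a minimal sketch in plain Python. It assumes three same-sized 8-bit single-channel images (the original in grayscale plus two edge maps) and packs them into the red, green, and blue channels. The function name `stack_layers`, the channel assignment, and the tiny 2x2 example values are all hypothetical, not my actual pipeline; in practice this would more likely be done on NumPy or OpenCV arrays.

```python
from typing import List, Tuple

Gray = List[List[int]]                    # one 8-bit channel as rows of pixel values
RGB = List[List[Tuple[int, int, int]]]    # rows of (red, green, blue) pixels


def stack_layers(original: Gray, edges_a: Gray, edges_b: Gray) -> RGB:
    """Pack three same-sized single-channel images into one RGB image.

    Assumed channel assignment: red = original, green = first edge map,
    blue = second edge map.
    """
    height, width = len(original), len(original[0])
    for layer in (original, edges_a, edges_b):
        if len(layer) != height or any(len(row) != width for row in layer):
            raise ValueError("all layers must share the same dimensions")
    return [
        [(original[y][x], edges_a[y][x], edges_b[y][x]) for x in range(width)]
        for y in range(height)
    ]


# Hypothetical 2x2 inputs, only to show the shape of the data.
gray = [[10, 200], [30, 40]]
edge_map_1 = [[0, 255], [0, 0]]
edge_map_2 = [[0, 255], [255, 0]]
combined = stack_layers(gray, edge_map_1, edge_map_2)
```

Each pixel of `combined` then carries the original intensity alongside the two edge responses, which is the form a three-channel image classifier expects.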

CS488 – Week 5 – Update

In the last week, I have spent more time learning about using fastai with a convolutional neural network, specifically the resnet34 and resnet50 models, which I think will be ideal for my purposes. I have also been working with Jordan to get the modules for this set up on Lovelace.

I have also been scavenger hunting for more data. I have data from Iceland, and a few confirmed spots on campus which I can use for both positive and negative training cases, but more data is better for this kind of AI. My search has led me to reach out to Tom Hamm, Greg Vaughn, the Earlham Library, and the Geology department.

Over the next week, I will continue learning about fastai and start implementing my model to be trained over the data that I have already.

CS488 – Week 4

This week I did a lot of research and work on the more anthropological side of my project. I emailed Tom Hamm and Greg Vaughn and got some great information about where I could find the foundations of old buildings around campus that I could use for my project. This information will hopefully be detailed enough for me to create some labeled training images.

I also spent some time this week learning fast.ai, which I have settled on for now as the best option for identifying images. The library is extensively documented, and extremely robust. As soon as Layout or a similar machine is back up, I will be able to start testing code, but for now, learning the library is just as important.

CS488 – Week 3

This week was a big planning week for me. I spent a lot of time writing down notes and ideas, as well as researching the details of what I need for my project. I also spent some time gathering resources for my project in the form of data from Iceland. A combination of 2018 and 2019 data will provide me a much-needed training/testing case.

I have progressed in my implementation, further streamlining the process of creating various edge detections of original images. This week I added the Prewitt edge detection algorithm and improved my Canny edge implementation to have a tight, wide, and auto mode.
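
To make the two pieces concrete, here is a minimal pure-Python sketch, not my actual implementation. `prewitt` applies the standard 3x3 Prewitt kernels and returns the gradient magnitude. `canny_thresholds` only picks the lower and upper hysteresis thresholds for a Canny call; the fixed values for the tight and wide modes and the median-based rule with `sigma = 0.33` for auto mode are assumptions based on a commonly used heuristic.

```python
from statistics import median
from typing import List, Tuple

Image = List[List[int]]  # 8-bit grayscale image as rows of pixel values

# Standard Prewitt kernels: horizontal and vertical intensity differences.
KX = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]
KY = [[-1, -1, -1], [0, 0, 0], [1, 1, 1]]


def prewitt(img: Image) -> Image:
    """Return the gradient magnitude, clipped to 0-255; border pixels stay 0."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = sum(KX[j][i] * img[y + j - 1][x + i - 1]
                     for j in range(3) for i in range(3))
            gy = sum(KY[j][i] * img[y + j - 1][x + i - 1]
                     for j in range(3) for i in range(3))
            out[y][x] = min(255, int((gx * gx + gy * gy) ** 0.5))
    return out


def canny_thresholds(img: Image, mode: str = "auto",
                     sigma: float = 0.33) -> Tuple[int, int]:
    """Pick (lower, upper) Canny thresholds. All values are assumptions."""
    if mode == "tight":
        return 225, 250   # assumed narrow band: keeps only strong edges
    if mode == "wide":
        return 10, 200    # assumed wide band: keeps many weak edges
    if mode == "auto":
        m = median(p for row in img for p in row)
        return int(max(0, (1 - sigma) * m)), int(min(255, (1 + sigma) * m))
    raise ValueError(f"unknown mode: {mode}")


# Hypothetical 4x4 image with a vertical step edge down the middle.
step = [[0, 0, 255, 255] for _ in range(4)]
edges = prewitt(step)
lower, upper = canny_thresholds(step, "auto")
```

The returned pair would then be handed to a Canny implementation, for example as the two threshold arguments of OpenCV's `cv2.Canny`.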

I have also been researching technologies for image recognition via machine learning with multiple channels. This is the idea that a single “object” in the AI can have multiple images associated with it, and it is necessary for my project.

CS488 – Week 2

In the past week, I have spent most of my capstone time organizing my project and testing some options for the machine learning component. I have been working with fast.ai and ImageAI python packages, trying to set up some groundwork for when I have data ready.

I have also organized all the algorithms that I want to try, at least until I can compare some results (after I see the results, I may opt to implement more).

My hope for the next week is to make progress on acquiring training data with drones, or at least narrow down where I might want to survey.

CS488 – Week 1 Update

This week has been mostly organizational for me. I found some more resources on GitHub that I want to try to make use of, and I worked on my design plan for implementation. I talked with Igor about technologies I can use, and what I might need to use them effectively.

The main obstacle right now is the amount of structure that my project requires, which is why I am taking my time to create a solid plan for how things will connect to one another.

Next week, as my design becomes concrete, I will start coding different segments of my project, using some of the preliminary work I have done as a guide.

CS388 – Week 13 Update

In the past week, I have been working mostly on my presentation and my proposal. My proposal is close to a finished state, but I am still working on collecting preliminary results. I have also been trying to create new figures (images and charts) which are easier to read on printed copies of my proposal.

For the implementation itself, I am still working on the things I outlined in the first section of my project timeline (setting up the pipeline of the project without adding all the features at each stage), to try and get a minimum version working. I think that this will take a couple more weeks, but I am hopeful that it will lead to me having some buffer time next semester during my implementation of the project.

CS388 – Week 12 – Update

In the past week, I have used my peer review from Jordan, as well as my own proof-reading of a physical copy of my draft to fix a lot of errors. I wrote my draft in a bit of a rush, and as a result, there were a lot of formatting errors, most of which I have now fixed. I have also updated some of my diagrams in accordance with feedback I have received and expanded some content in my draft that needed to be clarified.

In addition to working on my draft, I have been working on my project itself (preliminary work can be found in the git repo). I created a mockup GUI to give me some ideas about how I want to design the actual version next semester, as well as testing some implementations of different filters, operators, and edge detectors. Some of these results will hopefully be represented in the next version of my draft.

CS388 – Week 5 – Update

For my first idea, I have been researching methods of corner and shape detection that I might be able to apply to my implementation. I found a very relevant paper about shape detection using VLI and NIR imagery from drones, which, while new and not especially

For my second idea, I have been researching different classification methods for audio. Both of the papers I found this week use a metric called Mel-Frequency Cepstral Coefficients, in both KNN and DNN algorithms. I think, having read over these papers, I will continue to search more specifically for research related to this concept.
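
To illustrate the KNN side of this, here is a minimal sketch of a k-nearest-neighbor classifier over feature vectors. It assumes each sound has already been reduced to a fixed-length vector (for example, averaged MFCCs computed elsewhere); the two-dimensional vectors and the "kick"/"snare" labels below are made up purely for illustration, and `knn_classify` is a hypothetical name.

```python
from collections import Counter
from math import dist
from typing import List, Sequence, Tuple

Labeled = Tuple[Sequence[float], str]  # (feature vector, class label)


def knn_classify(query: Sequence[float], labeled: List[Labeled], k: int = 3) -> str:
    """Return the majority label among the k training vectors closest to query."""
    nearest = sorted(labeled, key=lambda item: dist(query, item[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Made-up feature vectors standing in for per-sound MFCC summaries.
training = [
    ([0.0, 0.1], "kick"), ([0.2, 0.0], "kick"), ([0.1, 0.3], "kick"),
    ([5.0, 5.1], "snare"), ([5.2, 4.9], "snare"), ([4.8, 5.0], "snare"),
]
guess = knn_classify([0.1, 0.1], training)
```

Distance here is plain Euclidean distance; whether that is the right metric for MFCC vectors is something the papers would need to settle.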

For my final idea, I have found two papers that discuss the obfuscation and user controls necessary to make geo-location safe, while still preserving its usefulness. One of these papers also discusses a

CS388 – Week 4 – Update

This week I tried to narrow down my research scope. I talked with Dave about my ideas and where I might begin researching each of them. I got some good ideas about previous work and what might work well to back up my project ideas, and then used that information to pick what I think were the six most relevant papers.

CS388 – Week 3 – Update

As suggested by Charlie, I chose a new direction for my third idea:

Name of Your Project? Geosocial Weight Algorithm

What research topic/question your project is going to address? Lots of social networks, including, for example, one that I created with my classmates last year, use geolocation services to sort data. Our social network uses location to not only sort and tag data, but to filter what is available to the user.

What technology will be used in your project? Location APIs, React Native (assuming that I build on to the pre-existing app we have built), Geosocial algorithms.

What software and hardware will be needed for your project? Requirements might include access to Apple devices for development and testing, such as a mac/macbook (could use one in hopper, or another lab), and some version of iPhone for testing, if making a mobile app is the most effective.

How are you planning to implement? Assuming I continue to work on the same basic network that we already began to set up, my implementation would continue in React Native. We already have the basic framework of a social networking app, and I think it would be a good foundation on which to apply my ideas for geosocial algorithms.

How is your project different from others? What’s new in your project? My main idea for this is to create an algorithm which can efficiently update the viewable radius of a post based on the feedback from other users in the area. This algorithm would have to take many things into account, such as the population density, user density, post density, and how much positive and negative feedback should affect the geographic radius of each post.

What’s the difficulties of your project? What problems you might encounter during your project? Programming in react-native can be a bit of a challenge. This algorithm could be very complex, so it might get tricky to work with.
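
The radius-update idea described above could be sketched roughly as follows. This is only one possible shape for such an algorithm, not a worked-out design: the function name `update_radius`, the formula, and every constant (`gain`, `reference_density`, the minimum and maximum radius) are assumptions chosen for illustration. It uses only feedback and user density; population and post density are left out.

```python
def update_radius(
    radius_km: float,
    upvotes: int,
    downvotes: int,
    user_density: float,
    *,
    gain: float = 0.5,                 # assumed: maximum fractional change per update
    reference_density: float = 100.0,  # assumed: users per square km where damping is 0.5
    min_km: float = 0.5,               # assumed lower bound on the viewable radius
    max_km: float = 50.0,              # assumed upper bound on the viewable radius
) -> float:
    """Grow or shrink a post's viewable radius based on nearby feedback."""
    total = upvotes + downvotes
    if total == 0:
        return radius_km  # no feedback yet, so leave the radius alone
    # Net feedback scaled to the range -1 (all negative) to +1 (all positive).
    score = (upvotes - downvotes) / total
    # In dense areas the same radius already reaches more users, so damp the change.
    damping = reference_density / (reference_density + user_density)
    new_radius = radius_km * (1 + gain * score * damping)
    return max(min_km, min(max_km, new_radius))


# Hypothetical post: 10 km radius, unanimous positive feedback, moderate density.
grown = update_radius(10.0, upvotes=10, downvotes=0, user_density=100.0)
```

With these made-up constants, unanimous positive feedback at the reference density grows the radius by a quarter, and unanimous negative feedback shrinks it by the same fraction.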

After updating my ideas, I picked out 5-7 articles for each of my ideas, quickly finding an abundance of relevant material. I also set up a meeting at the writing center this weekend to discuss how to get started writing, and I will start reading the articles I found soon. I plan to meet with Charlie again soon to discuss my strategy moving forward, and to talk about the quality of the sources I acquired this week.

CS388 – Week 2 – Three Ideas

Idea 1

- Name of Your Project? Corner Finder AI

- What research topic/question your project is going to address? Can deep learning and/or neural networks be used over a set of imagery to identify rectilinear structures which might be undetectable to the human eye (in other words, structures that are buried, or very obfuscated).

- What technology will be used in your project? Drones and drone imagery, as well as machine learning concepts like deep learning with training data and neural networks.

- What software and hardware will be needed for your project? I would like to make this project so that it can be used with the data the IFS trip collected from Iceland this summer. This also gives me an advantage with testing/learning data, as we created quite a stockpile of the kind of drone imagery I would like to analyse.

- How are you planning to implement? I plan to use python, or another language to create the software for this, possibly with support from some libraries (depending on language).

- How is your project different from others? What’s new in your project? From my first hand experience and preliminary research, it seems that most image analysis like this is done through direct processing of data, rather than by training an AI. The scripts we have used are mostly filtering the data that is there, rather than analyzing patterns. I think that this different approach might help us find things we wouldn’t find otherwise.

- What’s the difficulties of your project? What problems you might encounter during your project? The main difficulty will likely be the complicated nature of this kind of software. I have a medium level of experience with AI concepts, but something at this level will undoubtedly get murky with details eventually.

Idea 2

- Name of Your Project? Scatter Box

- What research topic/question your project is going to address? Is there an analysis based solution for organizing sounds based on their parameters? Can it be applied to music creation / production / organization? Can it be applied to scientific data? Can it be made efficient enough to be practical? Can it be abstracted to be broader; perform the same analysis over images, or text. In many ways, what I want this to be is a user-friendly, abstract K-nearest-neighbor classifier.

- What technology will be used in your project? Audio Manipulation algorithms, maybe other analysis algorithms for things other than audio (pictures, text), 3D data plotting.

- What software and hardware will be needed for your project? Libraries that include processing ability like NumPy and PyAudio, 3D mesh plot output (matplotlib has a simple version of this).

- How are you planning to implement? I plan to use python, or another language to create the software for this, possibly with support from some libraries (depending on language).

- How is your project different from others? What’s new in your project? There are many software options for organizing and evaluating sounds. What makes this project stand out is the idea of visualizing the data in terms of the actual parameters of the audio. These parameters could be anything from audio length, to pitch or frequency, and more could be added throughout the course of the project, as testing made it clearer which were the most beneficial to the purpose of the project.

- What’s the difficulties of your project? What problems you might encounter during your project? There could be problems with processing efficiency, as well as with the actual output of the program. I haven’t worked much with programming visuals, let alone 3d visuals.
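
As a small illustration of the Scatter Box idea of plotting sounds by their parameters, here is a minimal sketch that reduces a mono clip to one 3D point: length, a rough pitch estimate, and loudness. The choice of these three parameters, the zero-crossing pitch estimate (which is only reasonable for simple, clean tones), and the name `audio_point` are all assumptions for illustration.

```python
import math
from typing import Sequence, Tuple


def audio_point(samples: Sequence[float], sample_rate: int) -> Tuple[float, float, float]:
    """Reduce a mono clip to (duration in s, rough pitch in Hz, RMS loudness)."""
    if not samples:
        return 0.0, 0.0, 0.0
    duration = len(samples) / sample_rate
    # A pure tone crosses zero twice per cycle, so crossings / 2 approximates cycles.
    crossings = sum(
        1 for a, b in zip(samples, samples[1:]) if (a >= 0) != (b >= 0)
    )
    pitch = crossings / (2 * duration)
    loudness = math.sqrt(sum(s * s for s in samples) / len(samples))
    return duration, pitch, loudness


# Synthetic one-second 440 Hz sine wave standing in for a real recording.
rate = 8000
tone = [math.sin(2 * math.pi * 440 * n / rate) for n in range(rate)]
point = audio_point(tone, rate)
```

A list of such points, one per sound, is the kind of data a 3D scatter plot could display directly.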

Idea 3

- Name of Your Project? Earlham CS Info Tool / Resource Concatenation Tool

- What research topic/question your project is going to address? The CS department at Earlham has a huge range of resources available to students through the cs.earlham.edu website. Many of these resources, however, are somewhat buried. Taking myself for example, I didn’t find out about some of the available resources, such as the CS wiki, until after I was a Junior CS major.

- What technology will be used in your project? Android Development Environment, Cross-platform Development Environment (maybe React Native), Web Environment (JavaScript, Node, React), crawling and cataloging specific websites for information.

- What software and hardware will be needed for your project? Requirements might include access to Apple devices for development and testing, such as a mac/MacBook (could use one in hopper, or another lab), and some version of iPhone for testing if making a mobile app is the most effective.

- How are you planning to implement? I plan to use a platform-agnostic framework, such as React Native, to provide as broad support as possible to students.

- How is your project different from others? What’s new in your project? All the information to provide students with the help they need is there, but it needs to be connected in a simple, front-facing user interface. My project would provide a single, unified way to search the information made available to students in CS. The project would (in theory) be able to search all the resources, including the CS website, as well as the CS wiki, portfolio page, and any other resources. From a single search bar, the app would be able to return a list, sorted by relevance or most recent, of all the matching information from each one of these resources. My side goal for this project is to create a more abstract version of the concept, capable of applying the same combined search to a user-defined set of web pages and resources.

- What’s the difficulties of your project? What problems you might encounter during your project? I have little experience with crawling web pages for data, which could be a big part of the implementation of the project.
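
The single search bar described for Idea 3 could be sketched like this, assuming the pages from each resource have already been crawled into memory. The `Page` record, the simple term-count relevance score, and the example pages are all hypothetical; a real version would need a proper crawler and a better ranking.

```python
import re
from dataclasses import dataclass
from typing import List


@dataclass
class Page:
    source: str  # which resource the page came from, e.g. "wiki"
    title: str
    text: str


def search(pages: List[Page], query: str) -> List[Page]:
    """Return matching pages from all sources, most relevant first.

    Relevance is assumed to be how often the query terms occur in the
    title and text; pages with no occurrences are dropped.
    """
    terms = re.findall(r"\w+", query.lower())
    scored = []
    for page in pages:
        words = re.findall(r"\w+", f"{page.title} {page.text}".lower())
        score = sum(words.count(term) for term in terms)
        if score > 0:
            scored.append((score, page))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [page for _, page in scored]


# Made-up pages standing in for crawled content from three resources.
pages = [
    Page("website", "Courses", "List of CS courses"),
    Page("portfolio", "Cluster project", "A student project"),
    Page("wiki", "Cluster access", "How to log in to the cluster and submit cluster jobs"),
]
results = search(pages, "cluster")
```

Sorting by most recent instead of relevance would only need a date field on `Page` and a different sort key.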

CS388 – Week 1 – First Idea

Name of Your Project? Corner Finder AI

What research topic/question your project is going to address? Can deep learning and/or neural networks be used over a set of imagery to identify rectilinear structures which might be undetectable to the human eye (in other words, structures that are buried, or very obfuscated).

What technology will be used in your project? Drones and drone imagery, as well as machine learning concepts like deep learning with training data and neural networks.

What software and hardware will be needed for your project? I would like to make this project so that it can be used with the data the IFS trip collected from Iceland this summer. This also gives me an advantage with testing/learning data, as we created quite a stockpile of the kind of drone imagery I would like to analyse.

How are you planning to implement? I plan to use python, or another language to create the software for this, possibly with support from some libraries (depending on language).

How is your project different from others? What’s new in your project? From my first hand experience and preliminary research, it seems that most image analysis like this is done through direct processing of data, rather than by training an AI. The scripts we have used are mostly filtering the data that is there, rather than analyzing patterns. I think that this different approach might help us find things we wouldn’t find otherwise.

What’s the difficulties of your project? What problems you might encounter during your project? The main difficulty will likely be the complicated nature of this kind of software. I have a medium level of experience with AI concepts, but something at this level will undoubtedly get murky with details eventually.