Here’s the final draft of my paper to finish off the semester:

Last Program Update

Yesterday, I changed the name of the executable to “konductor” because I wanted the command to be a little more descriptive than just “main,” and I updated all related files to reflect the change. Unless something else happens, this will probably be my last program update for the semester.

Meanwhile, today is all about finishing my 2nd draft so that I can work on other projects due tomorrow.

v12.8

I was sick on the days I had planned to work on the poster, so it is substantially lower in quality than I wanted, and I haven’t submitted it for printing yet. I intend to do so before noon tomorrow.

Also because of illness, I have no other progress to report. Timeline:

- Finish the poster tonight (R)

- Submit the poster for printing tomorrow (F)

- Revise the paper for the last time (S)

- Poster presentation (T)

Most of that is either on schedule or only slightly behind. The part of the project that will be set back most by this is the software component, which is in good enough shape that it is now lower priority than all of the other items.

Daily work log 11/28/16

Worked on running a shell script to curl the API URL, and on running those commands through the Arduino program.

Daily Work Log [12/04 – 12/07]

I have mainly been polishing my presentation. I prepared the slides, rehearsed, and put some finishing touches on Robyn.

Daily work log 11/17/16

Worked on testing access to the Arduino once it is online: SSHing into the Arduino and running commands.

Daily work log 11/16/16

Connected the Arduino Yun to the internet, which was a huge pain: the Arduino can’t connect to ECsecure and has trouble connecting to ECopen.

Daily work log 11/15/16

Worked on organizing the hardware to be less messy and more compact.

Daily work log 11/29/16

Finalizing front-end application

Daily work log 11/30/16

First day of presentations.

Daily work log 12/1/16

Worked on the backend API, building out the update function to take arguments.

Daily work log 11/14/16

Final soldering day, adding safety sheaths to protect against electrocution

Daily work log 11/11/16

Practiced soldering with Craig.

Daily work log 11/10/16

Talked with Charlie about how to get stranded wires into the breadboard; began soldering early with Craig’s help.

Daily work log 11/9/16

Worked on the API and the front-end web application.

Daily work log 12/2/16

Worked on placing the appliance into the container Charlie gave me and on getting the curl command to work; runShellCommand is not working in the program.

Daily work log 12/5/16

Started work on the presentation; finalized getting curl working from inside the Arduino program.

Daily work log 12/6/16

Work on presentation slides and presentation

Daily work log 12/7/16

Final presentation day, finalized slides and prepared presentation

v12.6

I met with Charlie this morning. Because I felt increasingly sick throughout the day, I haven’t finished the poster as planned, but I know what needs to be added on Thursday: just a few hours of work that day, then printing either that afternoon or first thing Friday morning.

Progress on Paper and Program

Today, I tried to get rid of the OpenCV sleep function (waitKey) that waits for a key press so that the program can quit safely. This is the only sleep function left in the program that could possibly interfere with its timekeeping functions, and keyboard control is not necessarily a key part of the program either. However, I couldn’t find any alternatives in the OpenCV reference; I was looking for a callback function that is invoked when a window is closed, but that doesn’t seem to exist in OpenCV.

Meanwhile, I have continued to work on my paper and add the new changes to it, although I don’t know if I’ll have time to finish testing the program before the poster is due on Friday.

Daily log 12/4

- Created the databases and trained the classifiers for each emotion. Next step is to calculate the accuracy of each classifier.
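Since each emotion has its own classifier, the accuracy step could be as simple as counting correct predictions per classifier on held-out images. The sketch below is a hypothetical Python illustration (the `results` layout and the `classifier_accuracy` name are assumptions, not the project’s code); it assumes each classifier answers yes or no to “does this face show my emotion?” and that the answer can be compared with the true label.

```python
from typing import Dict, List, Tuple


def classifier_accuracy(results: Dict[str, List[Tuple[bool, bool]]]) -> Dict[str, float]:
    """Fraction of correct yes/no predictions for each emotion's classifier.

    results maps an emotion name to (predicted, actual) pairs, where each value
    says whether the face shows that emotion (hypothetical data layout).
    """
    accuracy = {}
    for emotion, pairs in results.items():
        if not pairs:
            accuracy[emotion] = 0.0  # no test images for this emotion
            continue
        correct = sum(1 for predicted, actual in pairs if predicted == actual)
        accuracy[emotion] = correct / len(pairs)
    return accuracy
```

Per-class accuracy can hide a classifier that simply says “no” to everything, so it may be worth also counting false positives and false negatives separately.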

Second Draft Complete

Wanted to update to make that clear.

I hope that my only requirements in the final version relate to style and language: adding the appropriate academic structure, rephrasing sentences and paragraphs, maybe moving something here or there.

I hope not to need additional content, though it’s possible that some section will need an extra paragraph or two.

It’s certainly enough for the poster.

Work Update [12/1 – 12/4]

I have been working extensively on improving the front end of Robyn’s web interface and Robyn’s natural language processing. I am trying to put some finishing touches on Robyn before the demo this Wednesday.

Paper Update

The paper was my project for the weekend. I had intended it to be the final version, in order to be done with it entirely before exams and other projects came due, but I will instead consider this version the second draft and submit it to that slot on Moodle.

More specific status of the paper:

- 6 pages, including references and charts

- Wording is still shabby. I’ve emphasized completing the page count and choosing the correct scope, and therefore left wording and flow problems to the final draft.

- I updated the flow diagram to include arrows and better logical grouping of objects and information.

- I incorporated examples of interface design from Apple and Nextdoor.

- I expanded the description of the software, its current state, and what it could become in the future.

- I expanded the section on the social significance of HCI and behavior changes.

- In the final version I will also revise the abstract, move sections around, and remove the cruft. The revision is the time to subtract, not add.

It’s a good second draft. It would be a bad final draft.

This week I will accomplish three things:

- Complete the final draft no later than Sunday night.

- Complete the basic software and clean up its git repository.

- Create the poster, lifting much of the content from the paper and building on a library template.

Looking forward to others’ presentations on Wednesday.

Demo Video and Small Update

Before I forget, here’s the link to the video of the demo I played in my presentation. This may be updated as I continue testing the program.

Today’s update was mainly a style change that moved all of my variables and functions (except main) into a separate header file so that my main.c file looks nice and clean. I may also split the functions into their own groups and header files, as long as I don’t break any dependencies on other functions or variables.

Progress Update

Over Thanksgiving break I learned how to compile and modify the ffmpeg software in Cygwin, a Unix-like environment for Windows. This makes the process of modifying and compiling the code easier. Furthermore, I have been developing and implementing algorithms that use the conclusions from my experiment to better choose how many keyframes to allocate for different types of videos. I have also made the full results of my experiments available here.

Question 30

- John Doe, 555 merrly lane, 5.0

- Jack Doe, 555 merrly lane, 3.0

- Jane Doe, 555 merrly lane, 4.0

Work Log[11-27 to 11-30]

I did not do much work during Thanksgiving since I was travelling a lot. However, since coming back from the break I have spent a good amount of time every day working on my senior seminar project. In particular, I have been working on a web interface for Robyn’s Python script. Since I had not done much work with Python web frameworks, I had to learn quite a bit about them. After looking at several options, including Django, CGI, Flask, and Bottle, I decided to use Bottle, since it has a relatively low learning barrier and is lightweight, which is sufficient for Robyn and also makes the system easier to set up. Today I finished the plumbing for the web interface, as well as the front end for Robyn.

Now, for the next couple of days I will work on the NLP part of Robyn.

Post-Presentation Thoughts

Today’s program update was all about moving blocks of code around so that my main function is only six lines long, and adding dash options (-m, -f, -h) as a typical command-line program would have.
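Dash options like these usually follow the POSIX getopt() convention, which a C program would typically use directly. As a hedged illustration, Python’s standard getopt module accepts the same option string, so a parser for -h, -m, and -f might look like the following. What -m and -f actually do in konductor isn’t stated here, so the flag meanings (a switch and a file path) are placeholders.

```python
import getopt

USAGE = "usage: konductor [-h] [-m] [-f FILE]"


def parse_args(argv):
    """Parse -h, -m and -f FILE using the same option string C's getopt() takes.

    The meanings of -m and -f below are placeholders, not konductor's actual options.
    """
    options = {"help": False, "m": False, "file": None}
    try:
        opts, _operands = getopt.getopt(argv, "hmf:")  # "f:" means -f needs an argument
    except getopt.GetoptError as err:
        raise SystemExit(f"{err}\n{USAGE}")
    for flag, value in opts:
        if flag == "-h":
            options["help"] = True
        elif flag == "-m":
            options["m"] = True       # hypothetical on/off switch
        elif flag == "-f":
            options["file"] = value   # hypothetical input file path
    return options
```

From a script this would be called as `parse_args(sys.argv[1:])`; unknown flags or a missing -f argument exit with the usage line.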

Having looked through the XKin library a little and finished my presentation, I’ll soon try to implement XKin’s advanced method for getting hand contours to see if it makes the hand’s centroid, and thus the hand motions, more stable. In the meantime, though, my paper needs major updates.

v11.30

Notes post-presentation, including a few features I need to complete the base version of the code:

- Ran long but that’s fine.

- Need the URL’s input by the author to be written to file

- Need to choose start URL’s from a file

- Need to produce a randomized-choice start URL for the user

- (Database may add overhead, for now focusing on text files)
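The three missing features all come down to reading and writing a plain text file of URLs, one per line. A minimal sketch of that file handling follows, written in Python for illustration only: the extension itself is JavaScript, and a browser extension would likely have to go through extension storage rather than writing files directly. The function names are hypothetical.

```python
import random


def save_urls(path, urls):
    """Append author-entered URLs to a text file, one per line."""
    with open(path, "a", encoding="utf-8") as f:
        for url in urls:
            url = url.strip()
            if url:
                f.write(url + "\n")


def load_urls(path):
    """Return the non-empty lines of the file as a list of URLs."""
    with open(path, encoding="utf-8") as f:
        return [line.strip() for line in f if line.strip()]


def random_start_url(path, rng=random):
    """Choose one start URL uniformly at random from the file."""
    urls = load_urls(path)
    if not urls:
        raise ValueError(f"no start URLs in {path}")
    return rng.choice(urls)
```

Passing a seeded `random.Random` as `rng` makes the choice repeatable for testing.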

Now I’m putting the project down until this weekend, to get some other work done. This weekend I will complete the software and paper. If there’s time I’ll do the poster.

v11.29

I am shortly going to complete the presentation for this class and a quiz for another, but I made massive progress today. It is not perfect but it has enough functionality for a demo tomorrow (though because it’s not perfect I’ll spend some time tomorrow afternoon practicing).

What it can do:

- works as a developer extension with basic functionality: has an icon, proper structure, several web files, and no privacy-compromising information

- logs messages to the JavaScript console (more verbose if the developer enables debug output)

- publicly accessible on gitlab

The core functionality deserves its own bullet points:

- on activation of the extension, start time is recorded

- on each new page, gets the URL

- compares the URL to each line in a file of acceptable stop URL’s

- if there’s a match, stop and send a message to the console with a timestamp of how long it took to get there
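The core loop above (record a start time, check each page against the stop list, report the elapsed time on a match) can be sketched as follows. This is a Python illustration of the logic, not the extension’s JavaScript; it swaps the line-by-line comparison for a set lookup and ignores trailing slashes, both of which are assumptions. The clock is injectable so the timing can be tested.

```python
import time


def normalize(url):
    """Treat 'https://example.com/page/' and 'https://example.com/page' as equal (assumption)."""
    return url.strip().rstrip("/")


class PathTimer:
    """Times how long the user takes to reach any acceptable stop URL."""

    def __init__(self, stop_url_lines, clock=time.monotonic):
        self.stop_urls = {normalize(line) for line in stop_url_lines if line.strip()}
        self.clock = clock
        self.start = clock()  # start time recorded when the timer is activated

    def visit(self, url):
        """Call on each new page; returns elapsed seconds on a match, else None."""
        if normalize(url) not in self.stop_urls:
            return None
        elapsed = self.clock() - self.start
        print(f"Reached {url} after {elapsed:.1f} s")  # stands in for the console message
        return elapsed
```

In the extension, the same subtraction would be done on two Date.now() values, which are in milliseconds rather than seconds.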

What I may still be able to do between now and tomorrow’s class but can’t guarantee:

- author inputs stop URL’s, those are written to a file by JavaScript for comparison

- start_time begins upon clicking the icon rather than upon activation (this should also change the icon in some way to show the state change)

- rename on gitlab so it isn’t known now and forever as “craig1617”

See my git commit messages here.

I’m not sure how much more work I’ll get done on this software this semester. I hope more, because it’s got a lot of promise and a good foundation, but I would be satisfied with this for purposes of the course grade given my other academic constraints.

Before Testing

I forgot that I also needed to compose an actual orchestral piece to demo my program with, so that’s what I did for the entire evening. I didn’t actually compose an original piece, but rather just took Beethoven’s Ode to Joy and adapted it for a woodwind quintet (since I don’t have time to compose for a full orchestra). Soon, I’ll actually test my program and try to improve my beat detection algorithm wherever I can.

I also tried adding the optional features into my program for a little bit, but then quickly realized they would take more time than I have, so I decided to ditch them. I did, however, add back the drawing of the hand contour (albeit slightly modified) to make sure that XKin is still detecting my hand and nothing but my hand.

Daily work log 11/28

Have made progress in training cascades for different emotions. Meeting with Dave tomorrow to determine implementation methods.

v11.28

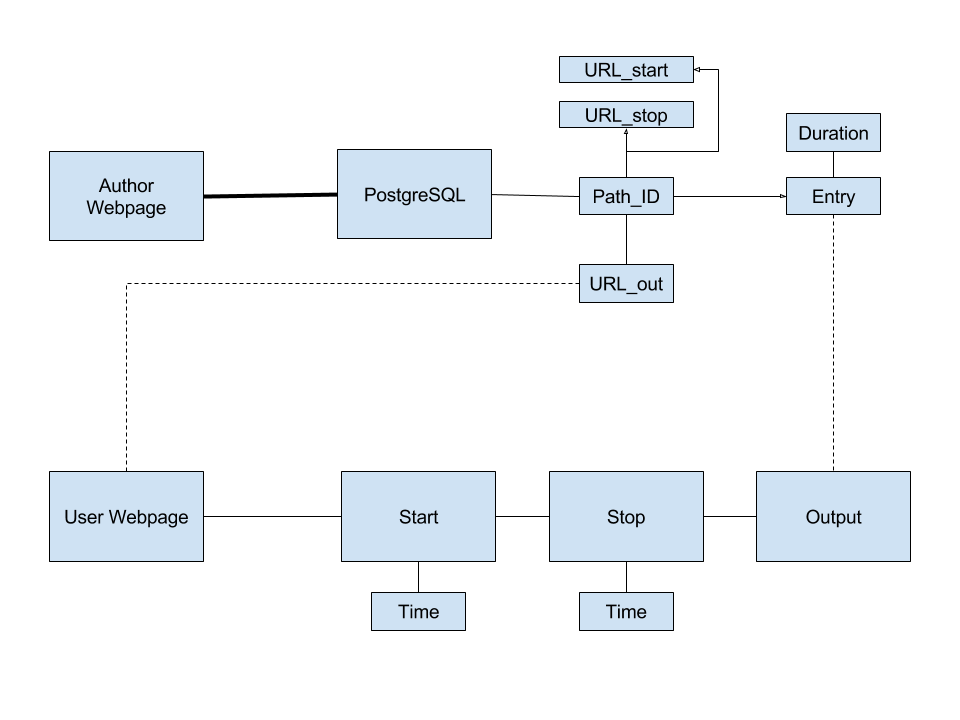

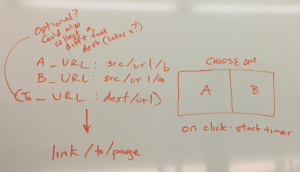

I solved multiple issues just by walking through this diagram …

… and it’s not even complete, I suspect. Subject to examination tomorrow morning.

Progress relative to what I anticipated: basically good. Details:

- I process text from forms and can populate and reset those forms. I can open URL’s and produce timestamps (and therefore time intervals). The basics, in other words, are done.

- I know a whole basket of potential URL problems that will need to be solved in future iterations of the software.

- The page’s appearance is plain but not completely offensive.

The biggest problem is that it does not (to use my metric from yesterday) “work, start->finish.” I’m planning to spend tomorrow much like today, except more emphasis on coding and testing. I will produce my presentation in the evening and demo what I have (which I will consider the final version, for purposes of this course) on Wednesday.

Also here’s a silly throwback to when I was initially doodling that diagram in Jot! for the iPad.

v11.27

Thanksgiving is over. I exercised my prerogative to take a break and did no work in the last week. I also chose to give my final presentation this week, so I have a firm timetable that prioritizes this project (both code and paper) for the next week.

To wit:

- Sunday, 11/27: Implement the basic JavaScript functions for submitting and processing URL’s; working on using Date.now() in JavaScript for time-stamping

- Monday, 11/28: Create an electronic and more detailed version of the flow diagram in the morning, make the code actually work, start->finish, in the afternoon

- Tuesday, 11/29: Ask Charlie questions in the morning; finish what’s left over; in the ideal world, I would spend the afternoon just getting the aesthetics right, but I suspect complications will lead to a fairly sparse interface (ironically, for an HCI project)

- Wednesday, 11/30: Final presentation, no further work on the software for this course anticipated

- Saturday and Sunday, 12/03 and 12/04: Finish the paper – read and write Saturday, revise Sunday, submit as soon as it’s available

There are a number of pitfalls I can foresee. If I fall short of completion by Wednesday, it will most likely be because one of the following proved more complex than anticipated:

- Moving from a web tool to a Chrome extension

- Parsing URL’s

- Data collection and storage

- Timers

- Exception handling

In short, this week will be mostly devoted to this class (and the following two weeks will emphasize others).

Core Program Complete!

Yesterday was a pretty big day as I was able to implement FluidSynth’s sequencer with fewer lines of code than I originally thought I needed. While the program is still not completely perfect, I was able to take the average number of clock ticks per beat and use that to schedule MIDI note messages that do not occur right on the beat. I may make some more adjustments to make the beat detection and tempo more stable, but otherwise, the core functionality of my program is basically done. Now I need to record myself using the program for demo purposes and measure beat detection accuracy for testing purposes.

Two other optional things I can do to make my program better are to improve the GUI by adding information to the window, and to add measure numbers to group beats together. Both serve to make the program and the music, respectively, a little more readable.

Learning to Schedule MIDI Events

The past few days have been pretty uneventful, since I’m still feeling sick and had to work on a paper for another class, but I am still reading through the FluidSynth API and figuring out how scheduling MIDI events works. FluidSynth also seems to have its own method of counting time through ticks, so I may be able to replace the current timestamps I get from <time.h> with FluidSynth’s synthesizer ticks.

Configuring JACK and Other Instruments

Today, I found out how to automatically connect the FluidSynth audio output to the computer’s audio device input without having to make the connection manually in QjackCtl every time I run the program, and how to map each instrument to a specific channel. The FluidSynth API uses the “fluid_settings_setint” and “fluid_synth_program_select” functions, respectively, for these tasks. Both features are now integrated into the program, and users can change the channel-to-instrument mapping as they see fit.
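A user-editable channel-to-instrument mapping could be as simple as a text file of channel/program pairs. The sketch below parses such a file in Python; the file format and function name are assumptions, not konductor’s actual format. In the C program, each pair would presumably end up in a call like fluid_synth_program_select(synth, channel, sfont_id, 0, program), with sfont_id being the id returned when the SoundFont was loaded and bank 0 assumed. The example mapping uses zero-based General MIDI program numbers for a woodwind quintet.

```python
def parse_instrument_map(text):
    """Parse 'channel program' pairs, one per line, into {channel: program}.

    Channels and programs are zero-based: 16 MIDI channels (0-15) and
    128 General MIDI programs (0-127). '#' starts a comment.
    """
    mapping = {}
    for lineno, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()
        if not line:
            continue
        fields = line.split()
        if len(fields) != 2:
            raise ValueError(f"line {lineno}: expected 'channel program': {raw!r}")
        channel, program = int(fields[0]), int(fields[1])
        if not (0 <= channel <= 15 and 0 <= program <= 127):
            raise ValueError(f"line {lineno}: value out of range: {raw!r}")
        mapping[channel] = program
    return mapping


WOODWIND_QUINTET = """
0 73  # flute
1 68  # oboe
2 71  # clarinet
3 60  # French horn
4 70  # bassoon
"""
```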

Now, the last thing I need to do to make this program a true virtual conductor is to incorporate tempo so that I no longer have to limit myself and the music to eighth notes or longer. Earlier today, Forrest also suggested that I use an integer tick counter of some kind to subdivide each beat instead of a floating-point number. Also, for some reason, I’m still getting wildly inaccurate BPM readings, even without the PortAudio sleep function in the way anymore, but I may still be able to use the clock ticks themselves to my advantage. A simple ratio can easily convert clock ticks to MIDI ticks, but I still need to figure out how to trigger note on/off messages after a certain number of clock ticks have elapsed.
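Forrest’s integer-tick suggestion and the clock-to-MIDI ratio can be combined: convert elapsed clock ticks to MIDI ticks, precompute an integer offset for each subdivision of the beat, and on each loop iteration fire any note on/off event whose offset has passed. A hedged Python sketch follows; the 1000 ticks-per-second time scale and the function names are assumptions for illustration. One thing that may be worth checking for the BPM problem: clock() measures processor time used by the program, not elapsed wall-clock time.

```python
# On POSIX (XSI) systems CLOCKS_PER_SEC from <time.h> is 1,000,000.
CLOCKS_PER_SEC = 1_000_000
# Assumed sequencer time scale: 1000 ticks per second, i.e. 1 tick = 1 ms.
MIDI_TICKS_PER_SEC = 1000


def clock_to_midi_ticks(clock_ticks):
    """Convert clock() ticks to MIDI ticks using integer arithmetic only."""
    return clock_ticks * MIDI_TICKS_PER_SEC // CLOCKS_PER_SEC


def subdivision_offsets(ticks_per_beat, subdivisions):
    """Integer tick offsets from the start of a beat for each subdivision.

    For 480 ticks per beat and 4 subdivisions (16th notes): [0, 120, 240, 360].
    """
    return [k * ticks_per_beat // subdivisions for k in range(subdivisions)]


def due_events(schedule, elapsed_ticks):
    """Split (offset, event) pairs into events due now and events still pending."""
    due = [event for offset, event in schedule if offset <= elapsed_ticks]
    pending = [(offset, event) for offset, event in schedule if offset > elapsed_ticks]
    return due, pending
```

Keeping every quantity an integer means a beat’s subdivisions always sum back to whole ticks, so rounding error cannot accumulate from beat to beat.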

Another Feature To Add

Yesterday, I changed the velocity of every offbeat to match the previous onbeat, since the acceleration at each offbeat tends to be much smaller and the offbeat notes were much quieter than desired. This change, however, also made me realize that my current method of counting beats could be improved by incorporating tempo calculations, which I had forgotten about until now and which aren’t doing anything in my program yet. The goal would be to approximate when all offbeats (such as 16th notes) occur using an average tempo, instead of limiting myself to triggering notes precisely on every 8th note. While this method would not be able to react to sudden changes in tempo, it could be a quick way to open my program to all types of music, not just music with basic rhythms.
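The average-tempo idea might look like this: estimate the beat interval from recent beats, then place the offbeats at evenly spaced fractions of that interval after the most recent beat, each inheriting the onbeat’s velocity. This is a minimal Python sketch with hypothetical names, using seconds rather than clock ticks for readability; like the approach described above, it cannot react to sudden tempo changes.

```python
def average_beat_interval(beat_times):
    """Mean spacing (seconds) between consecutive detected beats.

    The mean of the consecutive differences telescopes to (last - first) / (n - 1).
    """
    if len(beat_times) < 2:
        raise ValueError("need at least two beats")
    return (beat_times[-1] - beat_times[0]) / (len(beat_times) - 1)


def predict_offbeats(beat_times, onbeat_velocity, subdivisions=4):
    """Predict (time, velocity) for the offbeats after the latest beat.

    subdivisions=4 gives three 16th-note offbeats; each offbeat reuses the
    velocity of the preceding onbeat.
    """
    step = average_beat_interval(beat_times) / subdivisions
    last = beat_times[-1]
    return [(last + k * step, onbeat_velocity) for k in range(1, subdivisions)]
```

Averaging over only the last few beats, rather than the whole piece, would let the estimate follow gradual tempo changes.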

Late Minor Update

Yesterday, I only had time to make relatively small changes to my program, but I did expand the readme with more detail on how to use the program. I’ll explain even more as I finish developing it.

Also, I figured out that the reason for the bad points showing up so often was that part of the table was mistaken to be part of the hand, so I moved the Kinect camera a little higher, and sure enough, that issue was fixed. Now the only two important issues I have left to figure out are how to change the instrument that is mapped to each channel, and how the user will be allowed to change said mapping to fit the music. I may also try to figure out how to automatically connect the FluidSynth audio output to the device output of the computer, but I’m not completely sure if this is OS-specific.

Daily Work Log [11-16]

I finished my draft yesterday and am proofreading it today before submitting it. I am also still looking into integrating Python scripts into a webpage.

Getting FluidSynth Working

In a shorter amount of time than I thought, I was able not only to add FluidSynth to my program but also to get working MIDI audio output from my short CSV files, although I had to configure JACK so that all of the necessary parts are connected as well. For some reason, though, I’m seeing a lot more stuttering in the GUI lately, the hand position readings aren’t as smooth anymore, and the beats suffer as a result. I’ll try to figure out the cause soon, but right now I have a paper to finish.

v11.15

I am spending today (Tuesday) writing the paper. I have now written all the section and subsection headers I need and started incorporating useful bits of the proposal as a baseline.

In an effort to produce a working version of the software, the following are my goals for the rest of the day:

- paper: fill out the sections and revise what already exists there

- software: complete a bit of PHP code to interface with PostgreSQL, write starting HTML, create flow diagram

This means that the following will be delayed for the second draft and the poster:

- paper: add content, specifically expanding the Related Works section

- poster: import content from paper, adjust form

- software: a complete working base case

The final version of the paper will be a revision of the second draft, in which I will remove cruft rather than add content. The software version as of December 12, when the second draft is due, will be the last version of the software I work on for grading purposes in this class.

I intend to work minimally over break, so what I complete this week is likely all I will complete before returning the following week.

Daily Work Log [11-14]

Working on the draft. Also worked on reorganizing my software directory for better structure.

v11.13

I did a little more reading this weekend. Tomorrow (Monday) I’m occupied with meetings and classes, but I’m dedicating all day Tuesday to concluding my readings, completing the first draft, and (I hope) finishing iteration zero of the software.

Because of recent disruptions I am not keeping pace with my imagined progress from a few weeks ago, but Tuesday should catch me up enough that I can return from break and complete everything satisfactorily.

Daily Work Update [11-13]

I have been working quite a bit on my program. I set up what I think will be my primary database for information related to diseases. I have also been updating my GitHub to reflect the current state of my code. I ran into issues because the SQL script I was using was written for MySQL and used a lot of syntax that is incompatible with SQLite3, the database engine I am using. After several rounds of fixes, I was able to correct the script and create the database.
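For anyone hitting the same MySQL-to-SQLite problem, the sketch below shows the kind of rewrite involved, using Python’s built-in sqlite3 module. The disease table and its columns are hypothetical. MySQL table options such as ENGINE=InnoDB are a syntax error in SQLite, and in SQLite a column declared INTEGER PRIMARY KEY is assigned ids automatically, which covers the usual AUTO_INCREMENT use.

```python
import sqlite3

# Hypothetical table. A MySQL original might end with ") ENGINE=InnoDB;" and use
# AUTO_INCREMENT; here the table option is dropped and INTEGER PRIMARY KEY
# assigns ids automatically.
DISEASE_SCHEMA = """
CREATE TABLE IF NOT EXISTS disease (
    id       INTEGER PRIMARY KEY,
    name     TEXT NOT NULL UNIQUE,
    symptoms TEXT
);
"""


def open_db(path=":memory:"):
    """Open (or create) the database and make sure the schema exists."""
    conn = sqlite3.connect(path)
    conn.executescript(DISEASE_SCHEMA)
    return conn


def add_disease(conn, name, symptoms):
    """Insert a row with a parameterized query and return its new id."""
    cur = conn.execute("INSERT INTO disease (name, symptoms) VALUES (?, ?)", (name, symptoms))
    conn.commit()
    return cur.lastrowid


def find_disease(conn, name):
    """Return (name, symptoms) for a disease, or None if it is not stored."""
    return conn.execute(
        "SELECT name, symptoms FROM disease WHERE name = ?", (name,)
    ).fetchone()
```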

Minor Improvements

I briefly removed the timestamps from my program, but I didn’t notice any change in performance after all, so I left them in as before. I also made my program a little more interesting by playing random notes instead of looping through a sequence of notes, and changed the beat counter to increment every eighth note instead of every quarter note. The latter change will be important when I finally replace PortAudio with FluidSynth.

I also played around with the VMPK and Qsynth programs in Linux to refresh myself on how MIDI playback works and how I can send MIDI messages from my program to one of these programs. Thanks to that, I now have a better idea of what I need to do with FluidSynth to make Kinect to MIDI playback happen. I also plan to have a CSV file that stores the following values for the music we want to play:

beatNumber, channelNumber, noteNumber, noteOn/Off

The instruments that correspond to each channel will have to be specified beforehand too.
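A loader for that CSV layout could group the rows by beat so that the main loop only has to look up the current beat number. The Python sketch below assumes noteOn/Off is written as on/off or 1/0 and that a header row may be present; both are assumptions about the file format, and the function name is hypothetical.

```python
import csv
import io
from collections import defaultdict


def load_score(text):
    """Map beatNumber -> list of (channelNumber, noteNumber, is_note_on)."""
    events = defaultdict(list)
    for row in csv.reader(io.StringIO(text)):
        if len(row) != 4 or not row[0].strip().isdigit():
            continue  # skip blank lines, the header row, and malformed rows
        beat, channel, note, state = (field.strip() for field in row)
        events[int(beat)].append((int(channel), int(note), state.lower() in ("on", "1")))
    return dict(events)
```

For a file on disk, `load_score(open(path, newline="").read())` would do; each beat’s events can then be sent as note on/off messages when the beat counter reaches that number.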

More Experimentation

The past few days have been really rough on me: I attended the Techpoint Xtern Finalist reception all day yesterday while sick with a sore throat and a cold from the recent freezing weather. On a positive note, I used my spare time to keep writing my rough draft, so not much time was lost.

Back to my program: I’ve added a number of features and improvements, the first being timestamps for every recorded position of the hand so that I can actually calculate velocity and acceleration using time. I did notice that the “frame rate” of the program dropped as a result, so I may try to reduce the number of timestamps later and minimize their use. I also made sure that all points that fall outside the window are discarded to lessen the effect of readings nowhere near the hand. This also means checking the distance between two consecutive points and discarding the latest point if the distance is above some impossible value. I also use distance to prevent false positives when the hand is still, so that the hand has to move a certain distance in order to register a beat. A number of musical functions have also been added for future use, such as converting a MIDI note number to the frequency of the corresponding sound.
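The point filtering and the MIDI-to-frequency conversion described above can be sketched in a few lines. The Python below is an illustration, not the program’s C code: both distance thresholds are hypothetical values, and the conversion uses the standard equal-temperament formula f = 440 × 2^((n − 69) / 12), where MIDI note 69 is A4 at 440 Hz.

```python
import math

MAX_JUMP = 150.0   # hypothetical "impossible" jump between frames, in pixels
MIN_TRAVEL = 10.0  # hypothetical distance the hand must move to count as a beat


def accept_point(prev, point, width, height):
    """Keep a hand position only if it lies inside the window and is not an
    impossibly large jump from the previous accepted position."""
    x, y = point
    if not (0 <= x < width and 0 <= y < height):
        return False
    return prev is None or math.dist(prev, point) <= MAX_JUMP


def moved_enough(start, end):
    """Guard against false beats while the hand is (nearly) still."""
    return math.dist(start, end) >= MIN_TRAVEL


def midi_to_freq(note):
    """Equal-temperament frequency in Hz, with A4 = MIDI note 69 = 440 Hz."""
    return 440.0 * 2.0 ** ((note - 69) / 12.0)
```

Middle C (note 60) comes out at about 261.63 Hz, and each step of 12 notes doubles the frequency.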

Work log 11/12

Got facedetect.cpp to compile correctly. Now working on testing it with data provided by openCV.

Daily Work Log [11-10]

I have been working on trying to find a database of diseases for Robyn.

v11.10

I didn’t accomplish anything the first few days of the week. Today I want to read some more sources and finalize the paper topic. I may start incorporating the survey paper into the draft, so I can start building up the page count.

Daily Work Update [11-9]

I have been working on my bot, which I decided to name Robyn. I also created a repo for Robyn on my GitHub (github.com/arai13) and have started taking snapshots on a regular basis.

Tempo Tracking and More Searching

Yesterday, as suggested by Forrest, I added the ability to calculate the current tempo of the music in BPM based on the amount of time in between the last two detected beats. It doesn’t attempt to ignore any false positive readings, and it doesn’t take into account the time taken up by the sleep function, but it’s a rough solution for now.
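The tempo calculation described here is a single division: with the last two beats at times t1 and t2 in seconds, BPM = 60 / (t2 - t1). A minimal Python version with a hypothetical function name follows; like the original, it does nothing to reject false-positive beats.

```python
def bpm_from_beats(prev_beat, last_beat):
    """Tempo in beats per minute from the two most recent beat timestamps (seconds)."""
    interval = last_beat - prev_beat
    if interval <= 0:
        raise ValueError("beat timestamps must be increasing")
    return 60.0 / interval
```

For example, beats half a second apart give 120 BPM.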

Now, the next major step I am hoping to take with this program is to use the beats to play some notes through MIDI messages. I am searching for libraries that will allow me to send MIDI note on/off messages to some basic synthesizer, and FluidSynth looks to be a decent option so far.

Update 11/8/16

I am continuing the tests that I mentioned in the previous update and adding them to the graph.

Update 11/7/16

I spent Monday running more tests with ffmpeg to get better data. I am now forcing a specific number of prediction frames over a regular interval.
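Forcing a fixed keyframe interval, so that each keyframe is followed by the same number of predicted frames, can be expressed with ffmpeg’s -g (GOP size) option. The Python sketch below only builds the command list; it assumes the libx264 encoder, and pinning -keyint_min and disabling scene-cut detection with -sc_threshold 0 are assumptions about how to keep the interval strictly regular, not a description of the tests actually run. The function name is hypothetical.

```python
def fixed_gop_command(src, dst, gop_size):
    """Build an ffmpeg command that inserts a keyframe every gop_size frames."""
    if gop_size < 1:
        raise ValueError("gop_size must be at least 1")
    return [
        "ffmpeg", "-y", "-i", src,
        "-c:v", "libx264",
        "-g", str(gop_size),            # maximum keyframe interval
        "-keyint_min", str(gop_size),   # minimum keyframe interval
        "-sc_threshold", "0",           # don't add keyframes at scene cuts
        dst,
    ]
```

The list can be run with `subprocess.run(fixed_gop_command("in.mp4", "out_g30.mp4", 30), check=True)`, looping over several GOP sizes to compare file sizes and quality.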

Daily work log 11/8/16

Today I opened up the extension cord to see what I would be working with. I expected two solid pieces of copper; instead, I found many very thin strands. I will need to consult with Kyle about how to proceed from here.

Daily work log 11/7/16

Collected data and worked with it to determine the exact resistor values I will need for the circuit, paying close attention to power dissipation.

Daily work log 11/3/16

Worked with Kyle on the circuit board online using circuit.io; made good progress.

Daily work log 11/4/16

Finalized the circuit board online.

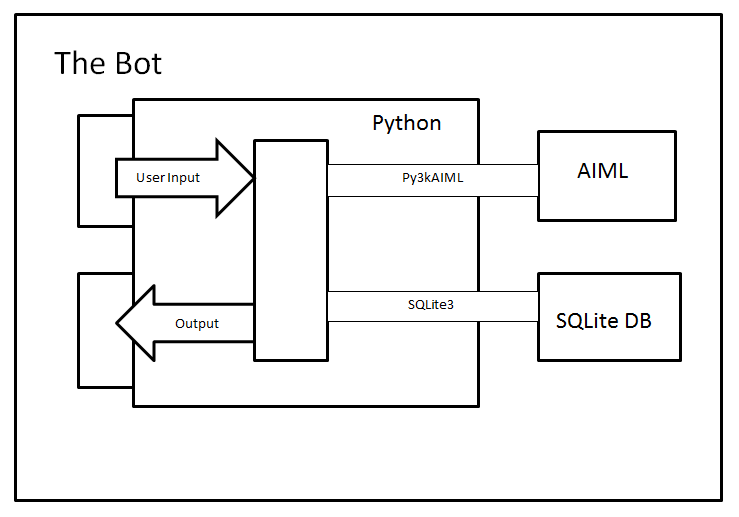

Daily Work Log [11-7] and Design

So after playing around and exploring for a bit, I have finally chosen my final set of tools for the project. I will be using Python, AIML, SQLite with Py3kAIML and sqlite3 libraries. I was able to finish the plumbing and now have a very basic bot that can listen to the user, fetch data from the SQLite database and print the result. Now that I have the main tools I will be using, the design of the system will be the following:

Fun with PortAudio and Next Steps

Today, I added the ability to change the volume of the sound based on the acceleration value, or how quickly the hand is moved, as well as change the frequency of the sound and thus change the note being played using a simple beat counter. I also noticed that the beat detection works almost flawlessly while I make the conductor’s motions repeatedly, which is a good sign that my threshold value is close to the ideal value, if there is one.

Now that the beat detection is working for the most part, the next thing I need to do is to figure out how to take these beats and either: a) convert them to MIDI messages, or b) route them through JACK to another application. Whichever library I find and use, it has to be one that doesn’t involve a sleep function that causes the entire program to freeze for the duration of the sleep.

November 2 – November 7 Weekly Update

November 2 – November 4

Since I figured that understanding Android development would take more time than I expected, I decided to speed up the development process by using Cordova as my development platform. I installed Cordova on my computer and started integrating the Wikitude API into it.

November 5 – November 6

I took an actual campus tour during family weekend to see how the guides walk guests through Earlham’s campus and to experience it from a visitor’s perspective. It was really helpful, and I collected some information for the application.

November 7

I started working with GPS locations and image recognition.

v11.7

Nothing new from this weekend. Today I’m considering the IO of the URL’s for the software and, if that goes well, writing the code to create the visual interface. I’ll outline the paper and gather final reading material tomorrow. At the end of the day tomorrow I’ll post a comprehensive update.

Daily work log 11-7

Downloaded Android Studio to begin learning Android development.

Daily Work Log [11-6]

I’ve been working on making an outline for the first draft of the paper.

Audio Implementation

I added the PortAudio functions necessary to enable simple playback and revised my beat detection algorithm to watch for both velocity and acceleration. My first impression so far is that the latency from gesture to sound is pretty good, but I noticed that the program freezes while the sound is playing (due to the Pa_Sleep function, which controls the duration of the sound). This freezes the GUI and could potentially mess up the velocity and acceleration readings as well. False positives or false negatives in the beats can also occur depending on the threshold, and the detection algorithm still needs more improvement to prevent them as much as possible.

Daily work log 11-05

Created cluster account and got the sample files from OpenCV copied to the cluster. Need to learn how to use qsub to compile programs.

Daily Work Log [11-5]

I am still looking into setting up the architecture with Python.

Current Design and Next Steps

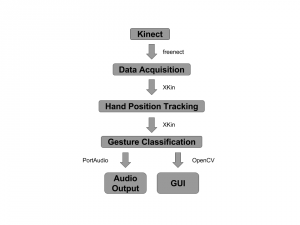

Here’s the current version of the flow chart of my program design, although it will surely be revised as the program is revised.

I’ve also been thinking about how exactly the tracking of velocity and acceleration is going to work. At a bare minimum, I believe what we specifically want to detect is when the hand moves from negative velocity to positive velocity along the y-axis. A simple switch can remember when the velocity was previously negative and trigger a beat when the velocity turns positive (or rises above some threshold value, to prevent false positives) during each iteration of the main program loop. The amount of acceleration at that point, or how fast the motion is being done, can then determine the volume of the music at that beat.
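The switch described above might be sketched as a small state machine: it arms when the velocity goes negative and fires once when the velocity next rises above the threshold, converting the acceleration at that moment into a MIDI velocity. This Python sketch is an illustration, not the program’s C code; the threshold, the full-volume acceleration, and the linear mapping are hypothetical. It also assumes y increases upward; in image coordinates, where y grows downward, the signs would flip.

```python
MAX_ACCEL = 5000.0  # hypothetical acceleration mapped to full volume


def acceleration_to_velocity(acceleration):
    """Scale |acceleration| to a MIDI velocity between 1 and 127."""
    scaled = int(abs(acceleration) / MAX_ACCEL * 127)
    return max(1, min(127, scaled))


class BeatDetector:
    """Fires once each time the y-velocity goes from negative to above threshold."""

    def __init__(self, threshold=50.0):
        self.threshold = threshold  # hypothetical, tuned by experiment
        self.was_negative = False   # the "switch"

    def update(self, velocity_y, acceleration_y):
        """Call once per loop iteration; returns a MIDI velocity on a beat, else None."""
        if velocity_y < 0:
            self.was_negative = True
        elif self.was_negative and velocity_y > self.threshold:
            self.was_negative = False
            return acceleration_to_velocity(acceleration_y)
        return None
```

Because the switch resets after each beat, a hand that keeps moving upward cannot trigger a second beat until it has moved downward again.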

v11.04

For the last couple of days I’ve been walking through a simple Chrome extension, HexTab, the source code of which is open and published on GitHub. The functionality is nothing like mine, but it has the virtues of …

- being simple and open-source

- relying on just a few JavaScript/HTML/CSS files and therefore being easily adaptable

- being well-organized (in contrast to a few others I’ve looked at)

- obeying the Chrome naming/organization guidelines to the best I understand them (it occurs to me that those guidelines may be worth mentioning in the HCI context as well as Apple’s)

- being complete enough to build from

I would like to start hollowing out this code soon, but I’m spending a while reading the code first.

Work log 10/29/16-11/4/16

I have spent the last week split up between 3 different tasks: Starting to chart the twitter ER diagram, following the O’Reilly Social Media Mining book to continue to learn about harvesting through APIs, and reopening my database systems textbook to remind myself how views work.

When I spoke with Charlie last week, we discussed the possibility of using views to select relevant tables from the larger Facebook and Twitter structures, creating a model that is easily modifiable and combines the two existing models, rather than trying to force the models themselves together into a new, rigid model.
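The view-based approach might look like the following: each source keeps its own tables, and a view selects and renames just the columns the combined model needs. This is a hypothetical SQLite sketch run from Python; the table and column names are invented for illustration and are not the actual Twitter or Facebook schemas.

```python
import sqlite3

SOCIAL_SCHEMA = """
CREATE TABLE twitter_tweet (id INTEGER PRIMARY KEY, user_id INTEGER, text TEXT, created_at TEXT);
CREATE TABLE facebook_post (id INTEGER PRIMARY KEY, author_id INTEGER, message TEXT, created_time TEXT);

-- The view exposes only the columns the combined model needs, under shared
-- names, without altering either underlying table.
CREATE VIEW social_post AS
    SELECT 'twitter' AS source, id, user_id AS author_id, text AS body, created_at AS posted_at
    FROM twitter_tweet
    UNION ALL
    SELECT 'facebook', id, author_id, message, created_time
    FROM facebook_post;
"""


def build_demo_db():
    """Create the hypothetical tables and the combined view in memory."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SOCIAL_SCHEMA)
    return conn
```

If either source model changes, only the corresponding half of the view has to be edited.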

Work on the Twitter model is coming along, and I hope to be done by the end of this week.

Update 11/3/16

I spent today reading through more of the documentation for ffmpeg to learn more about its structure and the commands it supports.

Daily Work Log [11-3]

I have been working on the outline for the draft.

Daily work log 11/2/16

Went to Home Depot and found a very helpful person who was knowledgeable about electronics. I have decided to use an extension cord as the backbone of my non-invasive device. I will plug both the Arduino’s power source and the washing machine into the extension cord, then open up the extension cord to read the washing machine’s voltage. I am working with Kyle on different techniques for using resistors to bring the voltage down to a readable level, and then using the Arduino’s built-in 0-5V reader to read it. I also began working with online breadboard and Arduino simulators to start testing.
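The resistor arithmetic behind this plan is the standard voltage divider, V_out = V_in * R2 / (R1 + R2), followed by converting the Arduino's reading back into volts. The Python sketch below works through both steps. It assumes a 10-bit board with a 5 V reference, such as the Uno, whose `analogRead()` returns 0 to 1023, and the resistor values are hypothetical. The analog pin can only measure 0-5 V, so the sketch also assumes the signal reaching the divider is DC (for example, after an isolating transformer and rectifier), not raw AC line voltage.

```python
# Assumptions: 10-bit ADC with a 5 V analog reference (e.g. an Arduino Uno with
# the default reference). R1 sits between the input and the analog pin, and R2
# sits between the pin and ground. Resistor values used below are hypothetical.
ADC_MAX = 1023
V_REF = 5.0


def divider_output(v_in: float, r1: float, r2: float) -> float:
    """Voltage seen at the analog pin: Vout = Vin * R2 / (R1 + R2)."""
    return v_in * r2 / (r1 + r2)


def adc_to_input_voltage(reading: int, r1: float, r2: float) -> float:
    """Convert an analogRead() value back into the voltage before the divider."""
    v_pin = reading * V_REF / ADC_MAX          # counts -> volts at the pin
    return v_pin * (r1 + r2) / r2              # undo the divider ratio


if __name__ == "__main__":
    # A 30k/10k divider scales a 20 V input down to exactly the 5 V limit.
    print(divider_output(20.0, 30_000, 10_000))  # 5.0
    print(adc_to_input_voltage(512, 30_000, 10_000))
```

Choose R1 and R2 so the largest expected input maps to just under 5 V. A ratio that is too small throws away ADC resolution, and one that is too large lets the pin exceed its range.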

Quick Update

Small update today since I have other assignments I need to finish.

I implemented a simple modified queue that stores the last few recorded positions of the hand in order to quickly calculate acceleration. I also learned a bit more about the OpenCV drawing functions and was able to replace drawing my hand itself on the screen with drawing a line trail showing the position and movement of the hand. Those points are all we care about, and drawing only them makes debugging the program a little easier.
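A bounded queue like the one described maps naturally onto Python's `collections.deque` with `maxlen`, which discards the oldest entry automatically when a new one is added. The sketch below is an illustration, not the project's actual implementation. `PositionHistory` and its methods are hypothetical names. Velocity and acceleration are plain first and second finite differences in pixels per frame, so you would divide by the frame interval (once, or squared) to get real units.

```python
from collections import deque
from typing import Optional, Tuple

Point = Tuple[float, float]


class PositionHistory:
    """Keeps the last `size` (x, y) hand positions, one per frame."""

    def __init__(self, size: int = 5) -> None:
        # Once full, appending drops the oldest position automatically.
        self.points: deque = deque(maxlen=size)

    def push(self, x: float, y: float) -> None:
        self.points.append((x, y))

    def velocity(self) -> Optional[Point]:
        """First difference of the two newest positions (per frame), or None."""
        if len(self.points) < 2:
            return None
        (x0, y0), (x1, y1) = self.points[-2], self.points[-1]
        return (x1 - x0, y1 - y0)

    def acceleration(self) -> Optional[Point]:
        """Second difference of the three newest positions (per frame^2), or None."""
        if len(self.points) < 3:
            return None
        (x0, y0), (x1, y1), (x2, y2) = self.points[-3], self.points[-2], self.points[-1]
        return (x2 - 2 * x1 + x0, y2 - 2 * y1 + y0)
```

The same stored points are what a line trail would be drawn through, so one buffer serves both the beat math and the debug display.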

v11.02

Presentation went well today. No project updates, except that I’ve decided that I hope to complete a working software version by the end of November so I can focus on the paper during December. (This will not interfere with completing the first draft by class in two weeks.)

Update 11/2/16

I have placed some of my early data in various spreadsheets. I am continuing to collect data, and I am ready to use the information and observations I have so far to start the first draft of my paper.

Update 10/30/16

I have been gathering more data to find the optimal number of keyframes for various types of videos. The videos with larger file sizes take a long time to compress.

Daily Work Log [11-1]

I have decided to implement the AIML, Python, MySQL architecture and have been looking at setting up an environment to run them all.

Daily work log 11/1/16

Couldn’t get OpenCV to install properly on my laptop, so I asked the CS admins to create a cluster account for me. By sshing to the cluster I will be able to use OpenCV. Tomorrow I expect to get the facedetect sample to compile and run. From there I can start working on implementing the emotion code.

Compiling the Program

After a good amount of online searching and experimentation, I finally got my Makefile to compile a working program. There is no audio output for my main program yet, but I am going to try out a different beat detection implementation that bypasses the clunky gesture recognition by simply tracking the position of the hand and calculating its acceleration. Hopefully it will be simpler and perform better.

Daily Work Log 11/1/16

Heading to Home Depot to talk to someone who knows about voltage splitters, or about where else to measure voltage from. Here’s hoping someone knows something.

Daily Work Log 10/31/16

Just wrapping the wires around the cord doesn’t work, and neither does attaching the wires to the prongs of the plug. I am thinking about going to an electrician or Home Depot to find someone who knows where to measure the voltage.

Daily Work Log 10/28/16

With measuring voltage figured out, I have moved on to determining where to attach the wires to measure it.

Daily Work Log 10/27/16

Worked on voltage monitoring and found two ways to measure voltage: the first measures 0-5V, and the second measures higher voltages using voltage dividers built from multiple resistors.

Testing PortAudio

I don’t know what took me so long to do it, but I finally installed PortAudio so that I can actually use it in my prototype program. To make sure it works, I ran one of the example programs, “paex_sine”, which plays a sine wave for five seconds, and got the following output:

    esly14@mc-1:~/Documents/git/edward1617/portaudio/bin$ ./paex_sine
    PortAudio Test: output sine wave. SR = 44100, BufSize = 64
    ALSA lib pcm.c:2239:(snd_pcm_open_noupdate) Unknown PCM cards.pcm.rear
    ALSA lib pcm.c:2239:(snd_pcm_open_noupdate) Unknown PCM cards.pcm.center_lfe
    ALSA lib pcm.c:2239:(snd_pcm_open_noupdate) Unknown PCM cards.pcm.side
    bt_audio_service_open: connect() failed: Connection refused (111)
    bt_audio_service_open: connect() failed: Connection refused (111)
    bt_audio_service_open: connect() failed: Connection refused (111)
    bt_audio_service_open: connect() failed: Connection refused (111)
    Play for 5 seconds.
    ALSA lib pcm.c:7843:(snd_pcm_recover) underrun occurred
    Stream Completed: No Message
    Test finished.

I’m not entirely sure what is causing the errors to appear, but the sine wave still played just fine, so I’ll leave it alone for now unless something else happens along the way.

Now that I have all the libraries I need for my prototype program, all I need to do next is to make some changes to the demo program to suit my initial needs. I’ll also need to figure out how to compile the program once the code is done and then write my own Makefile.

Halloween Update

Accomplished since 10/28 post:

- Completed the IRB form, pending approval by Charlie (it will most likely need to be revised, but it could be submitted by class on Wednesday)

- Drew a design of the minimal version of the program, more comprehensive design pending

Next to work on:

- Augment the minimal design by class on Wednesday

- Produce the presentation for Wednesday’s class

- Resume research and reading, the bulk of which I will probably do late in the week

- Find JavaScript code for an existing (open-source) extension, save it, and hollow it out to serve as the foundation for the Chrome extension

Daily Work Update [10-31]

I worked on an architecture for my program which is based on AIML with Python and MySQL in the backend.

Daily Work Update [10-30]

I have been looking at different ways to integrate a database into AIML.
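One common way to combine the pieces is to let AIML-style patterns pick the intent and let Python fetch the answer from the database. The sketch below is a toy illustration of that split, not a real AIML interpreter. It parses a tiny AIML document with `xml.etree.ElementTree`, turns the `*` wildcard into a regular-expression group, and uses an in-memory `sqlite3` database as a stand-in for MySQL. The `DB:` template prefix, the `facts` table and its sample row are hypothetical conventions invented for this example.

```python
import re
import sqlite3
import xml.etree.ElementTree as ET

# A one-category AIML document. "DB:location" is a hypothetical convention meaning
# "answer from the facts table, using rows whose topic is 'location'".
AIML_DOC = """<aiml version="1.0.1">
  <category>
    <pattern>WHERE IS *</pattern>
    <template>DB:location</template>
  </category>
</aiml>"""


def load_categories(aiml_text: str) -> list:
    """Return (compiled regex, template) pairs, one per <category>."""
    categories = []
    for cat in ET.fromstring(aiml_text).iter("category"):
        pattern = cat.findtext("pattern", "").strip()
        template = cat.findtext("template", "").strip()
        # AIML's "*" matches one or more words; other tokens must match exactly.
        parts = [r"(.+)" if tok == "*" else re.escape(tok) for tok in pattern.split()]
        categories.append((re.compile("^" + r"\s+".join(parts) + "$"), template))
    return categories


def make_db() -> sqlite3.Connection:
    """In-memory stand-in for the MySQL backend, with one made-up row."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE facts (topic TEXT, subject TEXT, answer TEXT)")
    conn.execute("INSERT INTO facts VALUES ('location', 'THE LIBRARY', 'Room 101')")
    return conn


def respond(text: str, categories: list, conn: sqlite3.Connection) -> str:
    # Normalize roughly as AIML interpreters do: drop punctuation, uppercase.
    normalized = re.sub(r"[^\w\s]", "", text).strip().upper()
    for regex, template in categories:
        match = regex.match(normalized)
        if match is None:
            continue
        if template.startswith("DB:"):
            # Assumes the matched pattern has one wildcard: the lookup subject.
            row = conn.execute(
                "SELECT answer FROM facts WHERE topic = ? AND subject = ?",
                (template[len("DB:"):], match.group(1)),
            ).fetchone()
            return row[0] if row else "I don't know."
        return template
    return "I don't know."
```

Keeping the SQL in Python, rather than in the AIML files, means the pattern files stay plain AIML and the database schema can change without touching them.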

Update 10/30/16

I’m still trying to get the libraries for OpenCV installed in order to compile facedetect.cpp.

Update 10/29/16

Continuing to gather data to evaluate ffmpeg.

Update 10/28/16

Continuing to work on gathering data for the speeds and compression ratios of ffmpeg.

Update 10/28/16

Currently still trying to compile the OpenCV facedetect.cpp file from the samples directory. I keep getting an error saying it cannot locate the libraries in the OpenCV.pc file. I am trying to get this resolved as soon as possible so I can use that program and begin working on the emotion detection portion of the project.

Kinect v1 Setup

The new (or should I say, old) Kinect finally arrived today, and plugging it into one of the USB 2.0 ports gives me the following USB devices:

    Bus 001 Device 008: ID 045e:02bf Microsoft Corp.
    Bus 001 Device 038: ID 045e:02be Microsoft Corp.
    Bus 001 Device 005: ID 045e:02c2 Microsoft Corp.

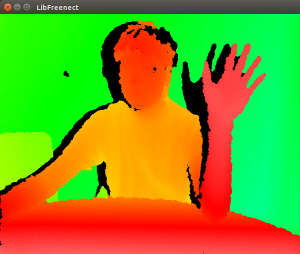

. . . which is still not completely identical to what freenect is expecting, but more importantly, I was finally able to run one of the freenect example programs!

This is one-half of the freenect-glview program window, which shows the depth image needed to parse the body and subsequently the hand. I then dived into the tools that the XKin library provides, helper programs that let the user define the gestures that will be recognized by another program. With some experimentation, along with re-reading the XKin paper and watching the demo videos, I found out that the XKin gesture capabilities are more limited than I thought. You have to first close your hand to start a gesture, move your hand along some path, and then open your hand to end the gesture. Only then will XKin try to guess which gesture from the list of trained gestures was just performed. It is a bit of an annoyance since conductors don’t open and close their hands at all when conducting, but that is something that the XKin library can improve upon, and I know what I can work with in the meantime.
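The close-move-open protocol described above amounts to segmenting a stream of frames by hand state. This Python sketch shows only that segmentation step as described in the post. It is not XKin's code, it leaves out classification against the trained gestures, and its input format (a closed/open flag plus a position for each frame) is an assumption.

```python
from typing import Iterable, List, Optional, Tuple

Point = Tuple[float, float]


def segment_gestures(frames: Iterable[Tuple[bool, Point]]) -> List[List[Point]]:
    """Split (hand_closed, position) frames into completed gesture paths.

    A path starts when the hand closes, collects positions while it stays
    closed, and is emitted only when the hand opens again. A path still in
    progress when the stream ends is not returned.
    """
    gestures: List[List[Point]] = []
    current: Optional[List[Point]] = None
    for closed, point in frames:
        if closed:
            if current is None:
                current = []          # hand just closed: start a new path
            current.append(point)
        elif current is not None:
            gestures.append(current)  # hand opened: path complete, ready to classify
            current = None
    return gestures
```

This also makes the limitation concrete: a conductor who never closes their hand never produces a segment for the recognizer to classify.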

Work Log 10/19/16-10/27/16

I spent this week charting out ER diagrams for a Facebook database schema. A lot of this work involved converting DDL statements I found online into a class diagram, and understanding how the classes related to each other. I am now at a point where I understand the entities and their relationships, and the next step is figuring out which of these entities I care about for my project.
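Part of turning DDL into a diagram is listing which tables reference which. If the DDL can be loaded into SQLite, its `PRAGMA foreign_key_list` reports each foreign key, which gives the relationship edges directly. The three-table schema below is a hypothetical, heavily simplified stand-in, not the actual Facebook schema. DDL written for MySQL may need small edits (for example, removing `ENGINE=...` table options) before SQLite accepts it.

```python
import sqlite3
from typing import List, Tuple

# Hypothetical, simplified schema for illustration only.
DDL = """
CREATE TABLE user (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE post (id INTEGER PRIMARY KEY, author_id INTEGER REFERENCES user(id), message TEXT);
CREATE TABLE comment (
    id INTEGER PRIMARY KEY,
    post_id INTEGER REFERENCES post(id),
    author_id INTEGER REFERENCES user(id)
);
"""


def relationships(ddl: str) -> List[Tuple[str, str, str, str]]:
    """Return sorted (table, column, referenced_table, referenced_column) edges."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(ddl)
    tables = [r[0] for r in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
    edges = []
    for table in tables:
        # Each row: (id, seq, table, from, to, on_update, on_delete, match)
        for row in conn.execute(f"PRAGMA foreign_key_list({table})"):
            edges.append((table, row[3], row[2], row[4]))
    conn.close()
    return sorted(edges)


if __name__ == "__main__":
    for edge in relationships(DDL):
        print(edge)
```

Each edge corresponds to one association line in the class diagram. Tables that never appear as a referenced table are good candidates to check when deciding which entities the project actually needs.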

I have also been using Mining the Social Web. This book is an overview of data mining popular websites such as Twitter, Facebook, and (interestingly, as a social media site) LinkedIn. It even touches on the semantic web and the not-so-popular Google Buzz. Each area is covered with explanations of how to set up programs, a brief introduction to the API and how it works, some example mining code, and a couple of suggestions on how to use it.

I plan to use the data I am learning to harvest through these APIs to test and iteratively hone my data model. I’m currently working on charting out the ER diagram for Twitter, although this is proving trickier than its Facebook counterpart because I’ve only been able to find fragments of the model in different places.

v10.28

Catching up after traveling last week, I focused mostly on procedural bits for the project: opening an Overleaf project for the paper and getting the formatting/section headers right, researching Chrome extensions, and drawing some diagrams (about which more later).

I will focus on building up the paper over the next week. I also need to complete the IRB form (I’m about halfway through now) and the software design, which I intend to do by the next class. Again, see here for the detailed timeline.

Update 10/27/16

I have begun work on testing how long it takes ffmpeg to compress certain files, and how effectively it compresses files at certain key frame sizes.
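A measurement harness for these tests could be as simple as timing one ffmpeg run per keyframe setting and comparing file sizes. The Python sketch below assumes the libx264 encoder and uses ffmpeg's `-g` option, which sets the GOP length (the maximum number of frames between keyframes). The function names and file paths are hypothetical, and the real tests may vary other settings too.

```python
import os
import subprocess
import time
from typing import List, Tuple


def build_command(src: str, dst: str, keyint: int) -> List[str]:
    """ffmpeg invocation re-encoding `src` with at most `keyint` frames between keyframes."""
    # -y overwrites dst without prompting; -g sets the GOP (keyframe interval) length.
    return ["ffmpeg", "-y", "-i", src, "-c:v", "libx264", "-g", str(keyint), dst]


def compression_ratio(original_bytes: int, compressed_bytes: int) -> float:
    """How many times smaller the output is than the input."""
    return original_bytes / compressed_bytes


def time_compression(src: str, dst: str, keyint: int) -> Tuple[float, float]:
    """Run one encode; return (wall-clock seconds, compression ratio)."""
    start = time.perf_counter()
    subprocess.run(build_command(src, dst, keyint), check=True, capture_output=True)
    elapsed = time.perf_counter() - start
    return elapsed, compression_ratio(os.path.getsize(src), os.path.getsize(dst))
```

For example, `for k in (12, 48, 250): print(k, time_compression("input.mp4", f"out_g{k}.mp4", k))` would print one (seconds, ratio) pair per setting. Repeating each run a few times would help separate real differences from timing noise on large files.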

I have also been working on compiling the program’s source code so I can work on modifications, but I haven’t yet succeeded at that.

Chapel IO module

Currently working on tagging the individual data fields in each message entry, and saving the newly tagged tweets to a new directory.