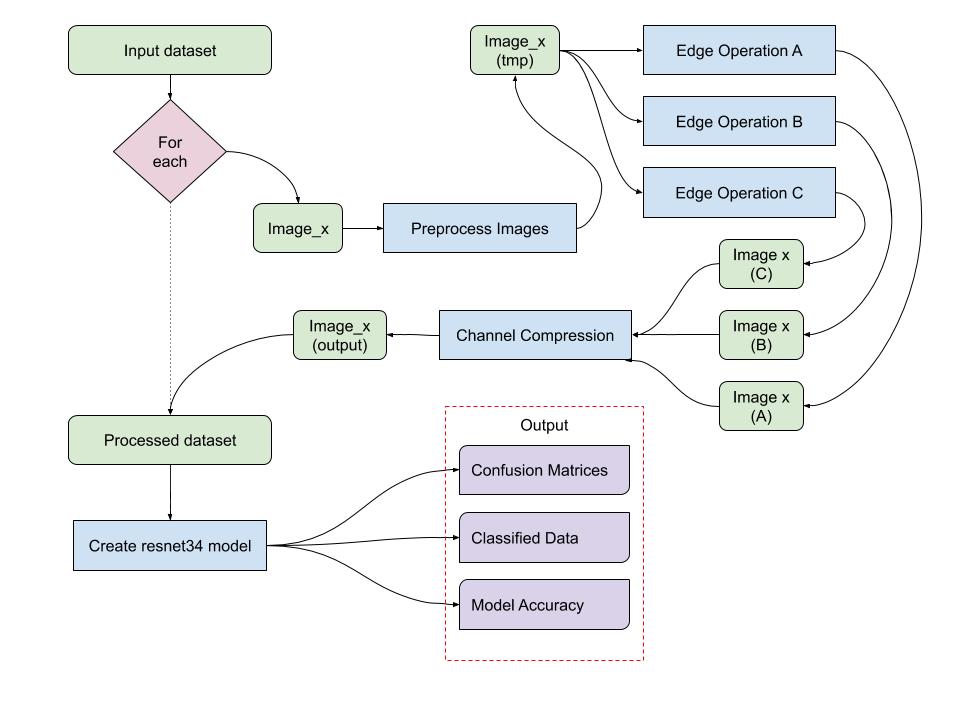

My project is about extracting features from images. Using low-cost collection techniques such as satellite imagery or drone surveys, a database of positive and negative cases can be created. Additional information will be extracted from each image in the database using a combination of modern edge detection algorithms and combined back into a single image as different colored layers of a JPEG image. These processed images, which are designed to carry as much information as possible, are used to train a machine learning model. My hypothesis is that the additional information provided by the edge detection algorithms will improve the accuracy and reliability of the machine learning model, reducing the need for expensive surveying equipment.

CS488-Week8-Update

I am currently working on a proof that non-contiguous kPARKS is NP-Complete. I am also mostly done with my paper.

CS488 – Software Architecture Diagram

Please click on the title above to view my flow diagram.

CS488 – Week 8

This week I focused more on refining my idea and how it would flow for a user, which then helped me to create a flow diagram for this week. During this process, I realized some flows in my code were inefficient, so I changed the flow of information through certain functions to match up with my flow diagram.

I created a validation function to test a loaded model, as well as an argument parser that makes it easier to pass values for key variables into the code from the command line.
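
A minimal sketch of such an argument parser with Python's standard argparse module. The option names (--mode, --model-path, --epochs, --batch-size) and their defaults are hypothetical placeholders, not the project's actual variables.

```python
import argparse


def build_parser():
    """Command-line options for training or validating a model.

    All option names and defaults are hypothetical placeholders.
    """
    parser = argparse.ArgumentParser(description="Train or validate a model.")
    parser.add_argument("--mode", choices=["train", "validate"], default="train",
                        help="train a new model or validate a saved one")
    parser.add_argument("--model-path", default="model.h5",
                        help="where the model is saved to or loaded from")
    parser.add_argument("--epochs", type=int, default=5,
                        help="number of training epochs")
    parser.add_argument("--batch-size", type=int, default=32,
                        help="number of samples per training batch")
    return parser
```

Calling `build_parser().parse_args()` with no argument reads `sys.argv`; passing an explicit list, as in `parse_args(["--mode", "validate"])`, is convenient for testing. argparse exposes `--model-path` as `args.model_path` and `--batch-size` as `args.batch_size`.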

CS488 – Week 7 – Updates

I developed the feature extraction module for my project, and it is working. It now converts a voice input file (.wav) to a sequence of acoustic feature vectors. I tested it with my own voice: two recordings of my voice produce two very different sequences of vectors, but I don't think we can tell much just by looking at these numbers, since they are simply a list of numbers derived from the .wav file. I am still fixing bugs in my modeling module. I followed Charlie’s suggestion to learn TensorFlow from the basics: I built and trained a model on a TensorFlow sample dataset, and it worked. This was just a basic attempt, so I will keep working on it.
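
The step from a .wav file to a sequence of feature vectors can be sketched with the standard library alone. This is a simplified illustration, assuming 16-bit mono PCM input: it splits the signal into overlapping 25 ms frames with a 10 ms hop (common defaults, chosen here as assumptions) and computes one log-energy value per frame. MFCC extraction builds on the same framing step with additional mel filterbank and DCT stages, which are not shown.

```python
import math
import struct
import wave


def read_wav_mono(path):
    """Read a 16-bit PCM mono .wav file; return (samples in [-1, 1), sample_rate)."""
    with wave.open(path, "rb") as wf:
        if wf.getsampwidth() != 2 or wf.getnchannels() != 1:
            raise ValueError("expected 16-bit mono audio")
        rate = wf.getframerate()
        raw = wf.readframes(wf.getnframes())
    count = len(raw) // 2
    samples = struct.unpack("<%dh" % count, raw)  # little-endian signed 16-bit
    return [s / 32768.0 for s in samples], rate


def frame_log_energy(samples, rate, frame_ms=25, hop_ms=10):
    """Split the signal into overlapping frames and return one vector per frame.

    Each vector here is just [log energy]; the small constant avoids log(0)
    on silent frames.
    """
    frame_len = int(rate * frame_ms / 1000)
    hop = int(rate * hop_ms / 1000)
    features = []
    for start in range(0, len(samples) - frame_len + 1, hop):
        frame = samples[start:start + frame_len]
        energy = sum(x * x for x in frame)
        features.append([math.log(energy + 1e-10)])
    return features
```

Because frames overlap, a one-second 16 kHz recording yields about a hundred vectors, which is one reason two recordings of the same voice can look very different when compared number by number.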

CS488 – Week 6 – Updates

Last week the CS system was down, so I couldn’t post my Week 6 update. I have finally finished setting up the environment for my modeling module. I am using the VGGVox models, which were created by the same authors as the dataset I am using. I almost gave up on this resource because it is written in MATLAB, which I have never used before, but then I found a Python resource that explains how to import the model. However, I still get errors when running it: TensorFlow reports that true_fn and false_fn have different data types. I traced the error to TensorFlow’s own source files, which I cannot modify, but I have not yet found the step where I pass data incorrectly.

Elevator Pitch

My senior project is to develop a technology that provides higher performance and security for target applications. It is called a unikernel, which is an optimized library operating system. A unikernel consists of the minimum set of components that a target application requires from a complete operating system. Unikernels are lightweight and provide stronger isolation than containers. I expect them to become a common runtime environment for applications in fields such as cloud computing and high-performance computing in the near future.

CS488 – Week 7 Updates

In the past week, I worked on creating a survey to collect inputs for content-based filtering, modified the skin type test questions, and obtained some responses. I also worked on implementing non-content-based filtering using TF-IDF, which I am struggling with. I will meet with Xunfei on Thursday and try to finish this part as soon as possible.
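
A minimal, from-scratch sketch of TF-IDF weighting, assuming each product is represented as a list of tokens (for example, words from its description or its ingredient names). This is the textbook formulation; scikit-learn's TfidfVectorizer uses a smoothed variant of the IDF term by default, so its numbers will differ.

```python
import math
from collections import Counter


def tf_idf(documents):
    """Return one {term: weight} dict per document.

    documents: list of token lists.
    tf  = count of the term in the document / document length
    idf = log(number of documents / number of documents containing the term)
    """
    n_docs = len(documents)
    doc_freq = Counter()
    for doc in documents:
        doc_freq.update(set(doc))  # count each term once per document
    weights = []
    for doc in documents:
        counts = Counter(doc)
        length = len(doc)
        weights.append({
            term: (count / length) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights
```

A term that appears in every document (such as "water" in most skincare products) gets an IDF of log(1) = 0, so it contributes nothing; rarer terms dominate the resulting vectors.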

CS 488 – Elevator Pitch

My project aims to create a skincare product recommender system based on the user’s skin type and the ingredient composition of a product. The main component of the project is content-based filtering, and the secondary component is non-content-based filtering. For content-based filtering, a user provides his or her skin type and selects a skincare product from sephora.com. The system then identifies the chemical components of the product and uses cosine similarity to recommend products with similar ingredient compositions. Five recommendations for each product category are then returned to the user. Non-content-based filtering lets users skip entering a product if they lack knowledge or have not yet found a product they like: a user provides his or her skin type and desired beauty effect to obtain the top 5 product recommendations across all 6 categories.
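
A minimal sketch of the content-based step, assuming each product is stored as a (name, category, ingredient list) tuple and ingredients are encoded as simple presence/absence. The function names and data layout are hypothetical; the similarity used is the standard cosine formula, the dot product divided by the product of the two vector norms.

```python
import math
from collections import Counter


def cosine_similarity(a, b):
    """Cosine similarity of two sparse vectors stored as {term: weight} dicts."""
    dot = sum(weight * b.get(term, 0.0) for term, weight in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def recommend(target, products, k=5):
    """Return up to k product names per category, most similar first.

    target:   ingredient names of the user's chosen product.
    products: list of (name, category, ingredient_list) tuples (hypothetical layout).
    Each ingredient counts as 1 if present (a simplification; weighting by
    position in the ingredient list is another option).
    """
    target_vec = Counter(set(target))
    by_category = {}
    for name, category, ingredients in products:
        score = cosine_similarity(target_vec, Counter(set(ingredients)))
        by_category.setdefault(category, []).append((score, name))
    return {
        category: [name for _, name in sorted(scored, reverse=True)[:k]]
        for category, scored in by_category.items()
    }
```

If the user's chosen product is itself in `products`, it will score 1.0 and rank first in its category, so it may need to be filtered out by name.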

CS488 – Elevator Pitch

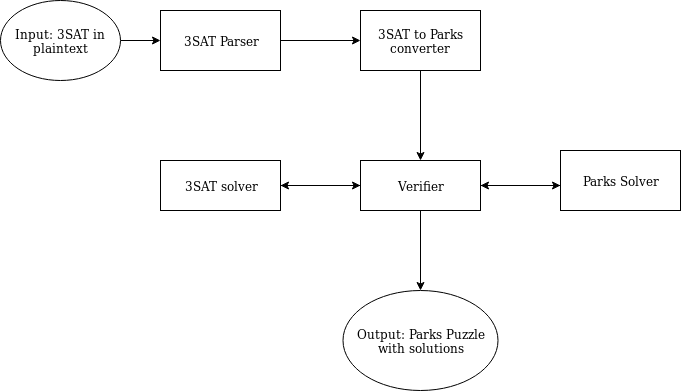

Parks Puzzle is a popular puzzle game played on a square grid. A Parks Puzzle consists of an n x n grid divided into contiguous regions known as parks. The aim of the puzzle is to place trees so that every row, column, and park contains exactly one tree, and no two trees are on squares that border one another. My senior project proposal is to show that the associated problem of deciding whether a given configuration of a Parks Puzzle is consistent with a solution, dubbed PARKS, is NP-Complete. This result lets us meaningfully state that the Parks Puzzle in general is NP-Complete, and that it is highly unlikely that any polynomial-time algorithm for the problem exists. Since I have already found a proof, I am currently working on the kPARKS problem, the analogous problem of placing k trees in each row, column, and park.
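
Showing NP-Completeness has two halves: membership in NP and NP-hardness. The membership half rests on the fact that a proposed tree placement can be checked in polynomial time, which the sketch below illustrates (the hardness reduction is not shown). It assumes parks are given as a grid of labels, trees as a set of (row, column) pairs, and that "border" includes diagonal neighbours, as in the usual Star Battle-style rules; the k parameter covers kPARKS.

```python
def is_valid_parks_solution(parks, trees, k=1):
    """Check a completed Parks (or kPARKS) placement.

    parks: n x n grid (list of lists) of park labels.
    trees: set of (row, col) positions holding a tree, all inside the grid.
    k:     required number of trees per row, column and park (k=1 is Parks).
    Runs in O(n^2) time for an n x n grid.
    """
    n = len(parks)
    row_counts = [0] * n
    col_counts = [0] * n
    park_counts = {label: 0 for row in parks for label in row}
    for r, c in trees:
        row_counts[r] += 1
        col_counts[c] += 1
        park_counts[parks[r][c]] += 1
        # No two trees may touch, including diagonally.
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                if (dr, dc) != (0, 0) and (r + dr, c + dc) in trees:
                    return False
    return (all(count == k for count in row_counts)
            and all(count == k for count in col_counts)
            and all(count == k for count in park_counts.values()))
```

For the decision problem on a partially filled configuration, a verifier would additionally check that the proposed placement contains every tree already given.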

CS488-Week7-Update

Now that I have a proof for the Parks Puzzle, I am spending time on a more general puzzle that we’ve dubbed kPARKS, the analogous problem of placing k trees in every row, column, and park. I am also writing up the proof, and the results that build up to it, in a clean and concise manner.

CS488 – Week 7

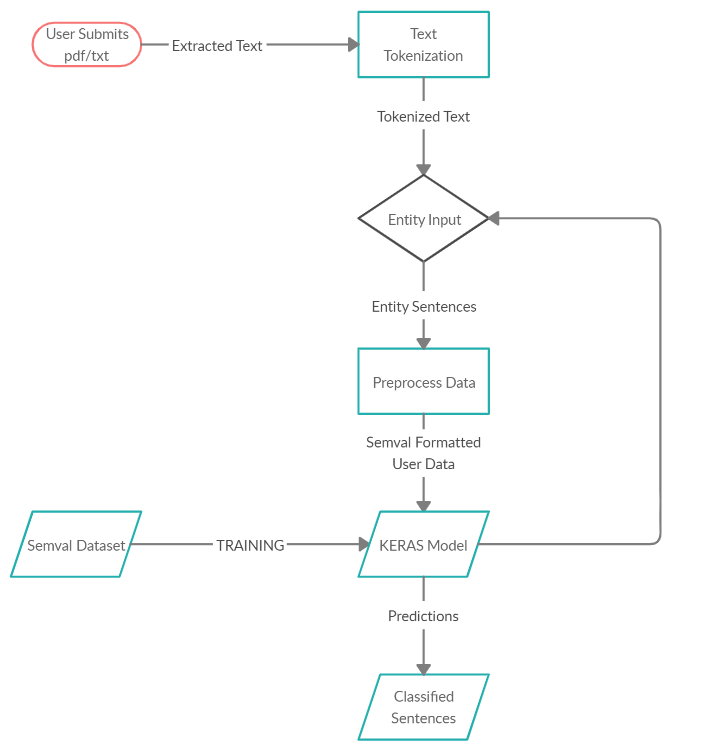

This week I began creating a model using the Keras Python library. I have been training it on the SemEval-2010 Task 8 dataset, reaching around 90% training accuracy over 5 epochs, with 60-65% validation accuracy. I was able to save and reload the model successfully.

I will be working on increasing the accuracy of this model in the coming week before applying it outside of its dataset.

CS488 – Elevator Pitch

My project aims to see how applicable semantic relation extraction models are outside of their dataset. Semantic relations are how we draw knowledge and facts from a text. No two texts are the same, and when we do research, we usually look for these relationships involving the particular subjects in a text that matter to us. I want to see whether a typical user can apply state-of-the-art semantic relation models outside of their training dataset to reduce the time needed to find specific knowledge about any entity in an unstructured text.

CS 488 – Week 7 – Elevator Pitch

My project aims to develop a reproducible penetration test that can help secure a large network. Tests will come from three avenues: physical testing, technical testing, and social engineering. The results of these tests will be compiled into a final report and given to the people who can make changes as needed.

CS 488 – Week 6

— Elevator Pitch —

My project aims to use a sequence to sequence encoder-decoder model to make text-based advertisements more engaging and readable. This will help businesses get an edge over their competitors by attracting new customers as well as retaining their existing customers by making sure that their advertisements are readable and engaging to their target audience. This will be done through the analysis of pre-existing advertisements which will then be used to train the model on how to restructure sentences to make them more readable and engaging.

CS 488 – Week 7

My project is an application for issuing and returning library books using QR codes. It is primarily intended for college libraries. Using their personal smartphones, users can scan a book’s QR code and check it out, which reduces staff workload and the average time spent in the library. Users can also search for any book in the library and quickly see basic information about it.

The login data and book data are stored in a Firebase database. The librarian application involves 3-4 staff users who can manage the flow of books through the app and are notified when fraudulent activity takes place.

CS 488 – Update – Week 6

- This week I focused on getting part of the PolicialNews Data set from Castello et al. to work with Weka, so that I can see whether I can recreate the results of their classification methods

- Downloaded a tool to combine excel files into one sheet without data loss, manually added headers and an extra column denoting which was fake and which was real

- But Weka still won’t load the data so that I can test it

- Next week I will focus on making smaller versions of the data set to see what features are the issue for Weka and testing features individually; I will also look into Keras as a machine learning tool and see what kind of testing can be done
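
Weka reads CSV files, but its native ARFF format declares each attribute's type explicitly, which can make loading problems easier to isolate. The sketch below, assuming rows come from csv.DictReader and that the article text and real/fake label live in columns whose names are passed in (hypothetical here), writes a small ARFF file from only the first N rows so that a reduced sample can be tested first. Quotes and line breaks inside the text are the usual culprits, so they are escaped or flattened. Most Weka classifiers need a STRING attribute converted first, for example with the StringToWordVector filter.

```python
def arff_quote(value):
    """Quote a string for ARFF: escape backslashes and single quotes, and
    flatten line breaks, which would otherwise split a data row."""
    value = value.replace("\\", "\\\\").replace("'", "\\'")
    value = value.replace("\r", " ").replace("\n", " ")
    return "'" + value + "'"


def rows_to_arff(rows, text_field, label_field, labels, relation="news", max_rows=None):
    """Build ARFF text with one STRING attribute and one nominal class attribute.

    rows:     iterable of dicts (e.g. from csv.DictReader).
    labels:   allowed class values, e.g. ["real", "fake"].
    max_rows: keep only the first N rows, to test Weka on a small sample.
    """
    lines = [
        "@RELATION " + relation,
        "",
        "@ATTRIBUTE text STRING",
        "@ATTRIBUTE class {" + ",".join(labels) + "}",
        "",
        "@DATA",
    ]
    for i, row in enumerate(rows):
        if max_rows is not None and i >= max_rows:
            break
        lines.append(arff_quote(row[text_field]) + "," + row[label_field])
    return "\n".join(lines) + "\n"
```

Writing the returned string to a file with a `.arff` extension lets Weka's Explorer open it directly; halving `max_rows` repeatedly is a quick way to find the row that breaks loading.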

CS488 – Week 6

This week I was trying to run two different TensorFlow models with checkpoints; however, I couldn’t get the checkpoints to work, and they are key to my project. After a discussion with Dave, I have decided to implement a simple model myself using the Keras library, since it is more abstracted and well documented, so it shouldn’t take too much time. I will be aiming for a minimum of 50% accuracy with my model on the SemEval-2010 Task 8 dataset, which I think is the best dataset for this task.

Following this implementation, I then want to start testing my model outside its dataset.

Week 6

I did not manage to isolate the foreground (the food) with zero user interaction, but I have had pretty good success at picking out just the food with minimal user interaction. I will try it with a pure white background next.

I can now successfully manipulate individual contour areas.

For next week, I will (finally) work on learning more about food photography.

I have most of the standard functionality working. Pretty much the only part I haven't coded yet is the resizing.

CS488-Week6-Update

This week I worked on finalizing my proof for the IFF and OR gadgets, and rewriting the final proof for the paper draft. I am now planning on working on an explicit algorithm for the reduction for use in the program.

CS 488 – Week 6

This week I started writing the first draft of the paper. The models seemed to run properly when I tested them with the small dataset. However, the accuracy was not what I wanted, since there was not enough data. I will try to run these models on an online cloud computing system while waiting for Layout to become available.

CS 488 – Week 6

Completed the paper outline. Working on the first draft as per the feedback received on the outline. Plan to complete the draft this week and present the first version of the project next week. I am still working on the QR code generation and scanning.

CS 488 – Week 5 – Updates

I wrote the outline of the senior project paper. It is similar to my proposal, but I changed my modeling method: I need to rewrite the modeling from GMM-UBM to Convolutional Neural Networks. However, I am still having bugs with the CNN resource I got from GitHub. I am pretty sure I will use MFCCs for feature extraction. If I cannot get a working CNN resource, I will probably need to switch to a DNN or another model, but I will try my best because I want to use a CNN.

CS488 Week 5

This week, I focused on writing the outline of the paper. I also have been using NLTK to extract features from the text reviews. I need to figure out how to handle a review that has multiple sentences since my feature extraction is currently only applied to one sentence.
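
One common way to extend a single-sentence feature extractor to whole reviews is to split the review into sentences, extract features from each, and average them. The sketch below assumes a hypothetical `sentence_features` function standing in for the existing extractor, and uses a naive regex splitter in place of NLTK's `sent_tokenize`, which handles abbreviations better but requires the Punkt model to be downloaded.

```python
import re


def split_sentences(text):
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def review_features(text, sentence_features):
    """Apply a per-sentence feature function and average over sentences.

    sentence_features: hypothetical function mapping one sentence to a dict
    of numeric features. A feature missing from a sentence counts as 0.
    """
    sentences = split_sentences(text)
    totals = {}
    for sentence in sentences:
        for name, value in sentence_features(sentence).items():
            totals[name] = totals.get(name, 0.0) + value
    return {name: total / len(sentences) for name, total in totals.items()}
```

Averaging keeps every review's feature vector the same size regardless of length; summing or taking the maximum per feature are reasonable alternatives depending on what the model should emphasize.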

CS488 Week 3

For this week, I followed a tutorial from Educative about Natural Language Processing and used it for data preprocessing. I was able to load the dataset, and I am in the process of preprocessing it. I ran into the problem of sentences that do not contain any emotion at all and therefore need to be eliminated. I also need to extract the word ‘not’ from words such as ‘don’t’ and ‘haven’t’ to better indicate negation. I will try to do this in the following week.
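
A minimal sketch of the negation step using a regular expression. It assumes English "n't" contractions and handles a few irregular forms (won't, can't, shan't) with a small lookup table; the table is illustrative rather than complete, and irregular replacements come out in lowercase.

```python
import re

# Contractions whose stem changes when "n't" is removed (illustrative list).
IRREGULAR = {"won't": "will not", "can't": "can not", "shan't": "shall not"}


def expand_negations(text):
    """Rewrite contractions such as "don't" and "haven't" as "do not" and
    "have not", so that "not" appears as its own token."""
    text = text.replace("\u2019", "'")  # normalise curly apostrophes

    def replace(match):
        word = match.group(0)
        lower = word.lower()
        if lower in IRREGULAR:
            return IRREGULAR[lower]
        return word[:-3] + " not"  # drop "n't" and append " not"

    return re.sub(r"\b\w+n't\b", replace, text, flags=re.IGNORECASE)
```

Running this before tokenization means a later step can treat "not" as an ordinary token, for example by flipping the polarity of the next emotion word.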

CS488 Week 2

For the past week, I skimmed through the Amazon Review datasets and chose Clothing, Shoes and Jewelry because its size is manageable. I then researched and learned how to use pandas to import the dataset from its JSON format into a DataFrame. In the following week, I will continue researching how to extract multiple features of interest from the review data, which will be used to train our ML models.
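
Review dumps like this are typically stored as JSON Lines (one JSON object per line). In pandas, `pandas.read_json(path, lines=True)` reads that layout in one call, and its `chunksize` argument can read a large file in pieces. For comparison, a standard-library sketch that keeps only selected fields; the default field names `reviewText` and `overall` are assumptions to check against the actual file.

```python
import json


def load_reviews(path, fields=("reviewText", "overall"), limit=None):
    """Read a JSON Lines file into a list of dicts with only the given fields.

    Blank lines are skipped; missing fields become None. The default field
    names are assumptions about the dataset, not guaranteed keys.
    """
    records = []
    with open(path, encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if not line:
                continue
            obj = json.loads(line)
            records.append({name: obj.get(name) for name in fields})
            if limit is not None and len(records) >= limit:
                break
    return records
```

The resulting list of dicts can be passed straight to `pandas.DataFrame(records)`, and the `limit` argument makes it easy to experiment on a small slice before loading the whole category.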

CS488 – week 5 – Update

In the last week, I have spent more time learning about using fastai with a convolutional neural network, specifically the resnet34 and resnet50 models, which I think will be ideal for my purposes. I have also been working with Jordan to get the modules for this set up on Lovelace.

I have also been scavenger hunting for more data. I have data from Iceland and a few confirmed spots on campus, which I can use for both positive and negative training cases, but more data is better for this kind of AI. My search has led me to reach out to Tom Hamm, Greg Vaughn, the Earlham Library, and the Geology department.

Over the next week, I will continue learning about fastai and start implementing my model to be trained over the data that I have already.

CS488-Week5-Update

The proof has been completed. This week I will work on writing it out rigorously, as well as designing the program.

CS 488 – Week 5

This week, I focused on the writing, including the outline for the capstone paper. I also started implementing some neural network models for the image dataset, such as MobileNet and EfficientNet. I will try to test the models using a small sample of the training data while waiting for Layout to become available, so that I can train on the whole large dataset.

CS 488 – Week 5

I finished writing my initial model and have trained it using a small sample dataset. The accuracy of my initial model is quite bad. I am currently researching how I can modify and improve the accuracy of my model as well as downloading a larger dataset to increase the accuracy. I also worked on the outline of the first draft of the final paper. Currently, the obstacle is the lack of accuracy of my model. This next week I will work on finishing up the first draft of the final paper as well as increasing the accuracy of my model.

CS 488 – Updates – Week 5

- I found a data set that would be the easiest to recreate results with

- I just need to merge the data set of credible news and the data set of non-credible news with an added column denoting whether it was real or fake to be able to test

- However, I ran into a major hiccup because Weka crashed and I can no longer open it.

- I am documenting the errors and trying to reinstall and fix Weka, but for some reason I can’t delete some of the old folders, so I still have not gotten Weka to start up again

- My hypothesis is that one of the packages is failing the whole opening process because I don’t have R on this machine

- I am also afraid of force deleting the folder because I have no idea how it will affect Weka or my computer

- I did successfully complete my outline but I do not have enough information to fill out the Results section and anything regarding my exact methodology.
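
The merge step described above (combining the credible and non-credible news files with an added column marking each row) could look like the following sketch with Python's csv module. It assumes both files share the same header; the "real"/"fake" values and the `label` column name are illustrative choices.

```python
import csv


def merge_labeled(real_path, fake_path, out_path, label_field="label"):
    """Concatenate two CSV files that share a header, adding a label column.

    Rows from real_path are labeled 'real' and rows from fake_path 'fake'.
    Returns the number of data rows written.
    """
    writer = None
    count = 0
    with open(out_path, "w", newline="", encoding="utf-8") as out:
        for path, label in ((real_path, "real"), (fake_path, "fake")):
            with open(path, newline="", encoding="utf-8") as f:
                reader = csv.DictReader(f)
                if writer is None:
                    # Use the first file's header plus the new label column.
                    writer = csv.DictWriter(out, fieldnames=reader.fieldnames + [label_field])
                    writer.writeheader()
                for row in reader:
                    row[label_field] = label
                    writer.writerow(row)
                    count += 1
    return count
```

If the second file has a column the first lacks, `DictWriter.writerow` raises a `ValueError`, which surfaces header mismatches early instead of silently dropping data.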

CS488 – Week 5 – Updates

In the past week, I found and fixed a bug for cosine similarity calculation in the code I was referencing. I was able to obtain more accurate recommendations across different product types. I also thought about and planned for next week’s task.

Week 5

I am trying to successfully detect the foreground object in the image, which will be food. While the initial idea was to use AI to detect the food in the image, this is not a completely solved problem yet. Since I can choose my own input, detecting the foreground object makes more sense. Charlie recommended a program called ImageMagick, and I will look at that.

For next week, I want to finish the foreground, and start resizing the images, and passing them to the ranking AI.

CS 488 – Week 5 – Update

This week I spoke with Charlie and learned how Earlham handles guest connections through ECOpen: guests are not allowed access to the network within Earlham and are simply given access to the outside internet. I also started my social engineering test on Thursday. I ran into some speed bumps with it and am currently resolving them. This coming week, I plan to talk to Aaron in ITS about Nmap and how it could be used to check for open ports that could be vulnerable. I plan to meet with him sometime early next week.

CS488 – Week 5

This week I first worked on creating an outline for my final paper, which was useful as it sharpened my current understanding of my project and where it is headed. I also was working with a new model and was able to successfully train it, save checkpoints and load them. I also created basic pre-processing functions for my data to match the format of input sets.

Loading the weights did not seem to work with this model. When I reached a checkpoint with 80%+ accuracy, I saved the weights, then loaded them and fed in test data from the dataset, but accuracy dropped to 5%. This was extremely confusing, and understanding it is my priority this week; otherwise I will have to find another model.

CS 488 – Week 5

User login and authentication are complete. A new user can sign up and save their details in the database. A sample set of 20 books will be used as testing data. I am currently figuring out QR code scanning and generating new QR codes. By next week, scanning should work, so that books can be scanned and retrieved from the database.

CS 488 – Week 4 – Updates

TensorFlow is working fine on Lovelace now. However, I just found that the demo uses TensorFlow 1, while the version installed on Lovelace is TF 2. The demo has a lot of code, and I am not sure whether I should update all of it to TF 2 or just find another resource. I talked to Xunfei, and she told me to briefly try other resources, because updating that demo is not a small amount of work.

CS488 – Week 3 – Update

I am still having issues running the demo code from GitHub. I requested installation of TensorFlow for Python 3 on Lovelace, but there still seems to be an error. It is probably an environment setup issue. I will communicate with the admins.


CS488 – Week 4

This week I did a lot of research and work on the more anthropological side of my project. I emailed Tom Hamm and Greg Vaughn and got some great information about where I could find the foundations of old buildings around campus that I could use for my project. This information will hopefully be detailed enough for me to create some labeled training images.

I also spent some time this week learning fast.ai, which I have settled on for now as the best option for identifying images. The library is extensively documented, and extremely robust. As soon as Layout or a similar machine is back up, I will be able to start testing code, but for now, learning the library is just as important.

CS 488 – Week 4

Last week I worked on collecting and preprocessing the data using Groupon API. I also started learning about and implementing my autoencoder model. So far the obstacle has been the learning curve but I have been extensively reading about neural networks and Keras and should be able to continue working on the project without any hiccups. Next week I plan to start my first draft of the paper as well as have a somewhat working version of the autoencoder model.

CS 488 – Week 4

This week, I started preprocessing my image data. The steps include resizing, cropping, normalizing, and finally converting the images to tensors so that they can be fed into a neural network. For the numerical dataset, I started looking into algorithms that are less computationally expensive than neural networks, such as k-nearest neighbors and support vector machines. That way, I can test them while the Layout server is still unavailable.
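
As an illustration of why k-nearest neighbors is cheap to try, a from-scratch version is only a few lines: it has no training phase and simply votes among the closest stored examples. This sketch assumes numeric feature vectors already scaled to comparable ranges; scikit-learn's KNeighborsClassifier is the usual library equivalent.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, query, k=3):
    """Classify one query point by majority vote of its k nearest training
    points under Euclidean distance."""
    distances = sorted(
        (math.dist(x, query), label) for x, label in zip(train_X, train_y)
    )
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]
```

Each prediction compares the query against every training point, so the cost moves from training time to prediction time; for a modest numerical dataset that is still far cheaper than training a neural network.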

Week 4

I finished the ranking module. It takes a folder of images, converts them to arrays, and passes the n best images to another function, which keeps processing the images and then picks the best n again to be processed. There is no processing yet.

For the processing, I have started working on genetic pixel changing. I am reading the pixels into an array and changing individual pixels. While it is (sort of) a genetic algorithm, I want the changes to be a little more intentional.
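
The ranking loop described above (keep the n best images, process them, re-rank, repeat) is essentially the selection step of a simple evolutionary algorithm. A sketch with hypothetical `score` and `mutate` functions standing in for the ranking AI and the pixel changes; making `mutate` less random is where the "more intentional" changes would go.

```python
import random


def evolve(images, score, mutate, n=3, generations=10, children_per_parent=4, seed=0):
    """Keep the n best candidates, mutate them, and repeat.

    images: list of candidate images (any representation, e.g. pixel lists).
    score:  hypothetical ranking function; higher is better.
    mutate: hypothetical function (image, rng) -> changed copy of the image.
    Parents stay in the pool, so the best score never decreases.
    """
    rng = random.Random(seed)
    population = sorted(images, key=score, reverse=True)[:n]
    for _ in range(generations):
        children = [mutate(parent, rng) for parent in population
                    for _copy in range(children_per_parent)]
        population = sorted(population + children, key=score, reverse=True)[:n]
    return population
```

Keeping the parents alongside their children (sometimes called elitism) guarantees the result is never worse than the best input, at the cost of exploring less aggressively.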

CS488-Week4-Update

This week I took a short break from working on the proof to start working on the app. I am currently trying to figure out whether it is worth designing the Parks app as a webapp, while also starting work on some of the basic modules.

CS 488 – Updates – Week 4

- I have spent this week analyzing my data sets to see whether any of them need external resources before they can be tested using Weka.

- I have found that some required me to have my app registered with Facebook Developers and Disqus and some were not actually in proper .csv format and so Weka (the tool that I am using to test classification methods) could not read it.

- This meant that I have a much smaller pool of articles whose results I am able to replicate.

- I have found 27 different data sets but I haven’t read all the papers those data sets are used in and some of the papers that mention the data sets are just explaining how they created the data sets and not how to use them in this context.

- Because of all of these little setbacks, I am working on just finding smaller sample data to test Weka with, so that I can make sure Weka is working and I am focusing on recreating the results from Castello et al.’s work for the moment.

- Castello et al.’s data format is different than what I have used for Weka before and I have to do some more digging to see if I need to combine the fake news data set with the credible news data set for each year first before sending it through Weka, or if I can just open both within Weka and tell it how to find what it needs.

CS 488 – Week 4

In the past week, I worked on generating five recommendations from each of the six product categories. I am still confused about the cosine similarity formula, so I’m planning to meet with other faculty members in the following week while continuing to work on the next task. Other than that, there weren’t any obstacles, and I just need to make the function return the results in a nice, clean way.

CS488 – Week 4

This week I made efforts to get predictions from my model that was trained last week. However, after some hours spent understanding the code, I realized that this model is not for practical use but rather theoretical predictions, as each query set requires a supporting set.

Following this setback, I have now found some models to train from a smaller dataset in comparison to FewRel. I believe these models are able to be used practically on random query sets. With the smaller training time required for them, I should be able to verify which is best for my project this week.

CS 488 – Week 4 – Update

This week, I continued my testing for the physical aspect of my project. During this testing, I tried to focus on ECOpen since it says there is no encryption associated with the network. Come to find out, there is still an authentication process that one must go through when trying to connect to ECOpen. So when I ran a packet sniffer on a device that was on an ECOpen channel, I could not see any data. (This is a good thing and was noted). I also finished preparing my social engineering test which will begin tomorrow, February 12th. This next week will consist of my social engineering test, processing results from physical test, and working on my paper.

CS 488 – Week 4

Working on the first draft of the paper.

Week 3

I have started working on passing images to the ranking algorithm.

I also have found some online food-photography courses I want to look at. Learning that will be helpful in knowing how to improve my images.

Update up to 2/5/2020

This past month I have mostly been working on getting everything for my project running and fighting some major issues. The first issue, which is almost solved, is that the NorthStar uses DisplayPort out, while my computer only has an HDMI port and a MiniDisplayPort in. It turns out that HDMI outputs are not compatible with DisplayPort, so the adapter I got for this does not appear to work. I am looking into getting the proper port by the end of this week.

The other issue I had to fight was that for a week and a half I did not have my primary computer, since it was broken and needed to be repaired. I had a much weaker backup computer that I used to test the hypothesis above, so I was not entirely unproductive during that time.

This coming week and weekend, I am hoping to have a fully functioning environment running and to be able to display tracked hands in the headset, depending on the availability of an adapter.

CS 488 – Week 3 – Updates

Last weekend, I spent time with a small group of friends filling out a spreadsheet of information on 2020 Senate candidates. So far, 154 of 348 filed candidates have been added to the sheet. During that time, we discovered that a few candidates run their campaigns from a public Facebook profile instead of a Facebook page. Charlie guessed that the API process for collecting profile data shouldn’t be too different from that for page data. Therefore, I am planning to collect this data as well, while noting which candidates use profiles in case their results differ drastically from the overall results. Next, I plan to develop scripts to start collecting and analyzing small amounts of data, and to scale and automate them later.

CS488 – Week 3

This week was a big planning week for me. I spent a lot of time writing down notes and ideas, as well as researching the details of what I need for my project. I also spent some time gathering resources for my project in the form of data from Iceland. A combination of 2018 and 2019 data will provide me a much-needed training/testing case.

I have progressed in my implementation, further streamlining the process of creating various edge detections of original images. This week I added the Prewitt edge detection algorithm and improved my Canny edge implementation to have tight, wide, and auto modes.
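
The Prewitt operator mentioned here correlates the image with two 3x3 kernels, one responding to horizontal intensity changes and one to vertical changes, and combines them into a gradient magnitude. The plain-Python sketch below is for clarity only (libraries such as OpenCV or scikit-image, which provides `skimage.filters.prewitt`, are far faster); it assumes a grayscale image as a 2-D list and leaves border pixels at 0. The `stack_channels` helper illustrates combining several same-sized edge maps into per-pixel tuples, as in the color-layer idea from the project description.

```python
import math

# Prewitt kernels: GX responds to left-right changes, GY to top-bottom changes.
GX = [[-1, 0, 1],
      [-1, 0, 1],
      [-1, 0, 1]]
GY = [[-1, -1, -1],
      [0, 0, 0],
      [1, 1, 1]]


def prewitt(gray):
    """Return the Prewitt gradient magnitude of a grayscale image (2-D list).

    Border pixels are left at 0 for simplicity.
    """
    h, w = len(gray), len(gray[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0
            for dy in range(3):
                for dx in range(3):
                    pixel = gray[y + dy - 1][x + dx - 1]
                    gx += GX[dy][dx] * pixel
                    gy += GY[dy][dx] * pixel
            out[y][x] = math.hypot(gx, gy)
    return out


def stack_channels(*layers):
    """Combine same-sized 2-D layers into per-pixel tuples, e.g. (R, G, B)."""
    return [[tuple(values) for values in zip(*rows)] for rows in zip(*layers)]
```

Since JPEG compression is lossy, a lossless format such as PNG would keep stacked edge layers exact.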

I have also been researching technologies for image recognition via machine learning with multiple channels. This is the idea that a single “object” in the AI can have multiple images associated with it, and it is necessary for my project.

CS488 – Week 3

This week I was able to create a saved checkpoint of my learning model for semantic relation extraction. This hopefully means I won’t need to train it further and can now focus on feeding it my data, which now needs to be pre-processed before being fed into the model. A basic GUI window was also up and running this week with PyQt5 which was great to see! I will be writing more code in the coming weeks now so I need to ensure that my project files are organized.

CS 488 – Week 3

This week, I tried to implement some models and was hoping to run them on our Layout server with GPUs. However, the system admins were still working on it and I could not ssh to the server. Therefore, I created a Google Cloud free trial account and started writing and testing my model on their servers.

CS 488 – Week 3

Since a significant part of my project involves marketing, my instructor advised me to talk to Seth and other professors about how I should approach building a dataset. After talking to them, I decided that a good approach would be to create a dataset using readability formulas: first I will calculate the average readability, and then filter the dataset using that average. A marketing dataset has been extremely hard to find, but asking around led me to the Groupon API, which lets me get 100 deals per second and will help me scrape millions of deals in a few days. I plan to run a script in the background to do this. Since last week, I have also successfully implemented word2vec using Gensim, a Python library.
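
"Readability formulas" covers several measures; one common choice is the Flesch Reading Ease score, 206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words), where higher scores mean easier text. The sketch below assumes that formula and a rough vowel-group syllable counter (accurate syllable counting is harder), then keeps texts scoring at or above the dataset average, as described above.

```python
import re


def count_syllables(word):
    """Rough syllable count: vowel groups, minus a silent final 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_reading_ease(text):
    """Flesch Reading Ease score; higher means easier to read."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        return 0.0
    syllables = sum(count_syllables(w) for w in words)
    return (206.835
            - 1.015 * (len(words) / len(sentences))
            - 84.6 * (syllables / len(words)))


def filter_by_average(texts):
    """Keep texts whose score is at least the average score of all texts."""
    if not texts:
        return []
    scores = [flesch_reading_ease(t) for t in texts]
    average = sum(scores) / len(scores)
    return [t for t, s in zip(texts, scores) if s >= average]
```

For example, "Save big today." has 3 words, 1 sentence, and 4 estimated syllables, giving 206.835 - 3.045 - 112.8 = 90.99, which is in the "very easy" range.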

CS 488 – Week 3 – Update

In the past week, I worked on calculating the cosine similarity between the ingredient composition of an input item and that of the rest of the items in the data. I am struggling to decide which formula to use for this, since the related project used an equation different from the “typical” cosine similarity formula. I will need to look into this more next week.

CS488-Week3-Update

I worked with possible ways of proving that non-contiguous Parks is NP-Complete, and found one good avenue for exploration. Over the week I produced a general technique to convert any instance of 3-SAT to an instance of the non-contiguous Parks Puzzle, thus proving that it is NP-Complete, our first major result. I am working to modify the proof, or try similar techniques for the contiguous case this week.

CS 488 – Update – Week 3

- I have started to keep a log of what I do every day for this project so if something goes wrong I know where to back up and begin again. This will also help later when writing about my process for the poster/paper

- I have started mapping all the datasets I found to what papers used them so that I could figure out which papers I could replicate

- I have started trying to replicate papers as well using Weka just to make sure I’ve set up everything correctly so that I can properly set up my own tools

- I’m having issues with how vague all the research papers are, however. To fix that, I think I’ll need to email the researchers with more questions so I can actually replicate their work and know what tools they used.

CS 488 – Week 3 – Update

This past week I have really dug into my physical testing. Using Kali Linux and a wireless adapter that supports monitor mode, I was able to see which networks were available and, from there, all of the clients connected to each network. However, I could only see the BSSID (MAC address) of each device, nothing more. I then moved to Wireshark, which showed me a little more data; I could potentially see what type of device each one was. However, all data on ECSecure was encrypted. Breaking the encryption was hard because we have hundreds of users with different passwords; there is not just a single password for the ECSecure network (that would be too easy to break). I plan to continue this testing and see what else I can find through ECOpen.

I have also started to set up my social engineering experiment so that everything is ready when the start date arrives.

CS 488 – Week 3

I worked on the login page and setup, and I am almost done with the forgot-password flow. I have to decide on the database for the login: whether it will be a single database or SQL for the whole application. I plan to work on the user interface features in the coming week. This involves setting up a database to store books, setting storage attributes, etc. This is the main portion of the project and will take the most time.

Week 2

I have spent some time thinking about how to split up the timeline into more detail. I have met with Charlie, and decided that the program should take a bulk of images as an input rather than a video. The next step is to learn more on the photography aspect of things.

CS 488 – Week 2

This week I read some papers on the most recent image classification models to build for my dataset. I ran into some challenges while reading them, since some of the terms were hard to understand. Next week, I will continue to work on the image dataset and model.

CS 488 – Week 2

Due to a lack of usable datasets, after talking to my advisor and instructor I decided to modify my project to focus on readability and sentiment instead. This past week I researched papers on readability and sentiment and have started writing code using Python (Keras). My goal for next week is to have some working code for a trained network that produces more readable code. I still need to look a bit more into what constitutes readable marketing material.

CS 488 – Update – Week 2

- This week I recovered all of the data sets I found last semester that were on my other computer. I then downloaded and extracted the data.

- I also chose to set up my own Developer SQL database on my laptop so that I can keep my training data and the user data in one accessible place.

- Because I wasn’t able to have my mentor meeting last week, I wasn’t sure where to begin with all the work I’ve set up. So I’ve decided to go back through all of my notes on the research papers I have read and create a giant spreadsheet detailing the tools used, features used, classification methods used, whether the dataset or the code was available, and if I’ve contacted the authors of these papers for more info.

- This will help me figure out how to build the learning loop so that I don't miss any feature or method.

- This will also help me show my advisor exactly what previous work covered and what I have to build on.

CS 488 – Week 2 – Update

This past week, I started phase 1 of my project, testing the physical security of the network. Along with starting this phase, I began writing the Google survey that will be used with the social engineering experiment. I also ordered the hardware needed for the social engineering test. I have not encountered any obstacles. Next week I will continue to use Wireshark to test the physical network using both a wireless and an Ethernet adapter.

CS488 – Week 2

In the past week, I have spent most of my capstone time organizing my project and testing some options for the machine learning component. I have been working with the fast.ai and ImageAI Python packages, trying to lay some groundwork for when I have data ready.

I have also organized all the algorithms that I want to try, at least until I can compare some results (after I see the results, I may opt to implement more).

My hope for the next week is to make progress on acquiring training data with drones, or at least narrow down where I might want to survey.

CS488 – Week 2

I forked MLMAN, a PyTorch model that achieved the second-highest validation accuracy on the FewRel dataset for semantic relation extraction. Running it locally with a useful number of iterations took too long to train, so I will train the model on hopper and save it there to fetch for local use. With this saved model, I hope to start pre-processing sentences and feeding them into it for validation.

CS488-Week2-Update

This week I finished the non-contiguous IFF and OR gadgets; however, after meeting with Igor, I came to the conclusion that there does not seem to be an effective way to put these two gadgets together. We also concluded that, in most cases, it is not particularly difficult to find a gadget for contiguous parks if one already knows the equivalent gadget for contiguous parks. Since I have reached a dead end, over the next week I am going to try one promising new direction, and I hope to be close to proving the result for non-contiguous parks within the next two weeks.

CS 488 – Week 2 – Updates

My project is to collect and study the Facebook Reactions and comments on posts by U.S. politicians to see whether bias exists based on the gender of the politician. With Charlie's advice, I have decided to focus my project on the 2020 Senate races. The 2020 presidential election doesn't have enough candidates to be a good sample size. The 2020 House races would likely have a wide variety of candidate strategies depending on the district, many districts with no competition, and fewer voters per race. By contrast, the Senate races have enough candidates to be a good sample size while also having more voters per race, meaning there should be more Facebook Pages with enough user activity to be used in my dataset.

This week I found sources for the Senate races, created a spreadsheet for candidates, and decided which relevant columns should be in the spreadsheet. I am filling out the sheet first for races whose primary filing deadline has already passed. Next, I plan to learn how to access the Facebook API using the Facebook SDK Python library, and to collect sample data for the candidates I have already added to the spreadsheet.

CS 488 – Week 2 – Updates

I decided to change my modeling method to neural networks. I read a paper called Text-Independent Speaker Verification Using 3D Convolutional Neural Networks and checked the authors' resources on GitHub. I tried to run their demo, but the required packages couldn't be installed on my laptop. I probably need to request a place to run it on CS/Cluster from the SysAdmins. I also found other similar resources on GitHub. My next step is to run them with test files. I also had my first weekly meeting with my advisor, Xunfei, to discuss the timeline and future plans.

CS 488 – Week 2

I made a visualization (plot) displaying ingredient composition similarity between different products and skin types. I attached two drop-down options for users to select from product categories and skin types. I also attached labels to the graph so that it displays the product’s name, brand, price, and rank.

CS 488 – Week 2

I am working on the login and sign-up system and reading existing papers that discuss such systems. Sign-up will be via Zimbra only, since allowing Facebook and other applications could lead to fraudulent accounts. The home page should be ready by next week.

CS 488 – Week 1

I went through the project again because it has been a while since I took CS 388 last spring. I downloaded the dataset and started doing some data manipulation and preprocessing. I will start looking at models for the image dataset next week.

CS 488 Week 1

It was the first week, and we had our presentations. I also found an advisor. Everything is swell.

CS488 Weekly Update (1/15 – 1/22)

This past week, I went back to my CS388 materials and re-read my proposal along with the research papers cited in it. In the following week, I need to obtain the dataset and learn (at least partially) the tools and ML models I will need for the project.

CS 488 – Week 1 Update

In the past week, I loaded the data, extracted ingredients from products, and made a document-term matrix containing product names and ingredient composition. I plan to visualize ingredient similarity between products this week. I haven’t faced many obstacles yet, but I want to finish things earlier than planned to allow some time for future obstacles.
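A document-term matrix like the one described above can be sketched in plain Python: each row is a product, each column an ingredient, and a 1 marks that the product contains it. This is a minimal sketch under assumptions: the product names and ingredient lists are made-up placeholders, not the project's data, and cosine similarity is one common way to compare rows, not necessarily the measure used in the project.

```python
import math

def build_dtm(products):
    """Build a binary document-term matrix from {product: [ingredients]}."""
    # Sorted vocabulary of every (lowercased) ingredient across all products.
    vocab = sorted({ing.strip().lower() for ings in products.values() for ing in ings})
    index = {term: i for i, term in enumerate(vocab)}
    matrix = {}
    for name, ings in products.items():
        row = [0] * len(vocab)
        for ing in ings:
            row[index[ing.strip().lower()]] = 1  # 1 = product contains this ingredient
        matrix[name] = row
    return vocab, matrix

def cosine_similarity(a, b):
    """Cosine of the angle between two rows; 0.0 if either row is empty."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

# Hypothetical example data (placeholders, not from the real dataset).
products = {
    "Cream A": ["Water", "Glycerin", "Niacinamide"],
    "Cream B": ["Water", "Glycerin", "Retinol"],
    "Serum C": ["Squalane", "Vitamin E"],
}
vocab, dtm = build_dtm(products)
print(round(cosine_similarity(dtm["Cream A"], dtm["Cream B"]), 3))  # 0.667
print(cosine_similarity(dtm["Cream A"], dtm["Serum C"]))            # 0.0
```

Products sharing two of three ingredients score about 0.67, while products with no ingredients in common score 0, which is the kind of pairwise value a similarity plot would display.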

CS 488 – Week 1 – Update

- I bought a new computer over the break because my older one was unreliable and crashed unexpectedly from time to time. So I spent this first week setting up the computer and downloading the tools that I believe I’ll be using.

- I also have spent a lot of time hunting down the data sets from the research papers that I have read and have a collection of over 22 different fake news data sets.

- I created my presentation slides which helped me think about the project in a different way since I need to think about how to explain things in a way that will make sense to everyone and not just myself.

- Finally, I chose my advisor and set up a meeting time and a shared notes space, but we were unable to meet this week since she will be at a conference.

CS488 – Week 1 Update

This week I created the presentation for Wednesday, which helped clarify my current goal after the work I did over break. I have found some new datasets and model repositories online, which I will present to my advisor to figure out which best suits my project. I have also tried to break down my timeline for the month following the selection of a module in more detail, and I have set personal project goals. I researched some libraries for GUI implementations and am currently leaning towards Electron (JavaScript) or PyQt5 (Python).

CS488-Week1-Update

This was the first week so I worked on getting back up to speed with the research, and on creating presentations for the first class.

CS 488 – Week 1 – Update

This week was mainly for refreshing myself on the details of my project. I finalized Charlie as my advisor for 488 and set up a weekly meeting time with him. I also completed the three-slide PowerPoint for the presentation in the joint 388/488 class. I adjusted my timeline and plan to start the first phase of my project on Monday. I did not have any obstacles this week. Within the next week I plan to start the physical testing phase of my project.

CS488 – Week 1 Update

This week has been mostly organizational for me. I found some more resources on GitHub that I want to try to make use of, and I worked on my design plan for implementation. I talked with Igor about technologies I can use and what I might need to use them effectively.

The main obstacle right now is the amount of structure that my project requires, which is why I am taking my time to create a solid plan for how things will connect to one another.

Next week, as my design becomes concrete, I will start coding different segments of my project, using some of the preliminary work I have done as a guide.

CS 488 – Week 1 – Updates

First of all, I chose Xunfei, who was also my advisor last semester, as my advisor, and we set our weekly meeting time. I have read some new papers and decided to change my modeling method from GMM-UBM to neural networks, combined with i-vectors or x-vectors. I have found related code for Deep Neural Networks/Convolutional Neural Networks for speaker verification on GitHub. GMM-UBM is one of the most classical and dominant methods for speaker verification, but its accuracy decreases as the number of users increases. Nowadays, there are newer methods that perform better, like Deep Neural Networks/Convolutional Neural Networks. This change to my project might make it more challenging, because the new method probably has fewer resources available. But I really want to push the speaker verification accuracy above 90%.

CS 488 – Week 1

I am getting familiar with Android Studio. As per my timeline, the first step in the application is to implement the login system. My aim is to decide by the end of this week whether to use Firebase and SQL or only SQL; I have to speak to Charlie about this. I revised my project through the first presentation and submitted the advisor form. Next week, work on the application should begin!

CS388 Final Proposal

Finished the final draft of project proposal.

CS 388 – Week 16 – Update

I submitted the final proposal for my project. It is attached to this post.

CS 388 – Week 15 – Updates

I just worked on my final paper this week. I met with Xunfei to ask questions about it.

Week 15

I have been working on my final proposal this week. I will post it after the deadline for the assignment.

CS388-Week16-Update

I finished my final proposal and I’m rechecking everything for submission this week.

CS388 – Week 13 Update

In the past week, I have been working mostly on my presentation and my proposal. My proposal is close to a finished state, but I am still working on collecting preliminary results. I have also been trying to create new figures (images and charts) which are easier to read on printed copies of my proposal.

For the implementation itself, I am still working on the things I outlined in the first section of my project timeline (setting up the pipeline of the project without adding all the features at each stage), to try and get a minimum version working. I think that this will take a couple more weeks, but I am hopeful that it will lead to me having some buffer time next semester during my implementation of the project.

CS388 – Week 13 – Update

I finished my presentation. My next step is to add an abstract and more introduction to my proposal paper, and finish its final version. I did more research in the past week and planned to change my modeling method from GMM-UBM to a Convolutional Neural Network or Deep Neural Network. GMM-UBM is very classical but also "old-fashioned"; CNNs and DNNs are newer and perform better. GMM-UBM's performance drops as the number of speakers increases. But I do not have enough time to change methods this semester. I will do more research during winter break and will probably change next semester.

CS 388 – Week 15 – Updates

I have researched and read a few more papers in the last week. I have expanded my analyze -> split -> replace modules with actual implementation details, using an encoder-decoder model to swap less engaging text with more engaging text. To do this, the text needs to be vectorized before the model can be trained, and I have found a module that can help me achieve that. I have also worked extensively on my proposal presentation. I met with my advisor to go over the presentation and was advised to explain the slides so that a person with no understanding of neural networks can follow what is being communicated.
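To illustrate what "vectorizing" text for an encoder-decoder model usually means, here is a minimal sketch: each word is mapped to an integer id, and each sentence becomes a fixed-length id sequence wrapped in start/end markers and padded. The token names (`<pad>`, `<start>`, `<end>`, `<unk>`) and the helpers `build_vocab` and `vectorize` are hypothetical illustrations, not the module mentioned above; in practice a library tokenizer such as the Keras `TextVectorization` layer would typically handle this step.

```python
# Hypothetical reserved tokens for padding, sequence boundaries, and unknown words.
PAD, START, END, UNK = "<pad>", "<start>", "<end>", "<unk>"

def build_vocab(sentences, min_count=1):
    """Map each word seen at least min_count times to an integer id."""
    counts = {}
    for s in sentences:
        for w in s.lower().split():
            counts[w] = counts.get(w, 0) + 1
    vocab = {PAD: 0, START: 1, END: 2, UNK: 3}  # reserved ids come first
    for w in sorted(counts):  # sorted so ids are deterministic
        if counts[w] >= min_count:
            vocab[w] = len(vocab)
    return vocab

def vectorize(sentence, vocab, max_len):
    """Return <start> word ids <end>, truncated or padded to max_len."""
    ids = [vocab[START]]
    ids += [vocab.get(w, vocab[UNK]) for w in sentence.lower().split()]
    ids.append(vocab[END])
    ids = ids[:max_len]  # long sentences are cut off (this may drop <end>)
    return ids + [vocab[PAD]] * (max_len - len(ids))  # short ones are padded

corpus = ["Buy now", "Shop the sale now"]
vocab = build_vocab(corpus)
print(vectorize("Shop now today", vocab, 6))  # [1, 7, 5, 3, 2, 0]
```

Unseen words such as "today" fall back to the `<unk>` id, and padding gives every sentence the same length so batches can be fed to the encoder.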

CS 388 – Week 14 – Updates

- I spent the vast majority of this week looking for projects that have specifically detailed how they implemented a fake news detector and reading through the articles I’ve already found.

- While some have given a lot more detail on their process, unfortunately, I can’t understand some of the details.

- A lot of the details go into the mathematical aspects of machine learning and convolutional neural networks. That’s very difficult for me because math is not my strong suit.

- I will either have to find a tutorial that will actually explain it well or I might have to compromise my big goals for this project. I need help finding papers or tutorials that clearly explain their processes so I can move forward in the way that I want to.

CS 388 – Week 14 – Updates

This week I have been working on my presentation and have refined my proposal a bit. I will keep working on my presentation until the upcoming Wednesday while I wait for feedback on the second draft of my proposal.

Week 14

This week I have worked on the presentation. That’s it.

CS 388 – Week 14 – Update

This week I worked on the final proposal. Feedback from the second draft indicated that there were some grammar and structure errors. I added the testing and abstract sections. I hope to finish the proposal by the end of this week.

CS 388 – Week 13 – Updates

- I focused this week on fixing my first proposal.

- I re-did all of my diagrams so that they would use the proper shapes

- I re-wrote my design section

- I added more to my introduction to better explain the importance of the problem and the gaps in existing work

- I elaborated on the timeline and gave a high-level overview by month

- I also did research into the PostgreSQL database because that seems like the best tool for my project.

- Next week over the break, I hope to go more in depth into my readings and start to finalize the tools I want to use

CS388 – Week 12 – Update

I read some new papers and research on different modeling algorithms and started to worry about the accuracy of my system. The accuracy relies not only on the modeling but also on the training dataset and the quality of the acoustic input (the speaking environment). Still, selecting a suitable modeling algorithm is important. Currently popular models include HMM, VQ, DTW, GMM, UBM, and i-vector. I have temporarily chosen a hybrid GMM-UBM. I might change it in the future or combine it with other models to improve accuracy. My goal is to reach an accuracy of at least 90%.